Text Moderation - Overview

Introduction

V3 (Demo) vs V2 (Enterprise) at a glance

- V3 API: easiest to try today in the Playground. For developer testing ONLY: 100 requests/day limit. Great UI for testing and proofs-of-concept.

Note: Text Moderation requires an annual contract. Smaller customers should use our self-serve VLM.

- V2 API: Enterprise project with dedicated API keys, higher limits, and additional stats.

Try the Playground now. See the API tab there for code samples. Need higher volume? Contact us and we’ll increase your V3 limits or create an Enterprise project.

How Text Moderation works

Hive offers text moderation across categories such as sexual, hate, violence, bullying, promotions, and external links. We support ~30 languages, and our models also understand emojis.

We take a two-pronged approach:

- A deep learning-based text classification model to moderate text based on semantic meaning.

- Rule-based pattern-matching algorithms to flag specific words and phrases.

The text classification model is trained on a large proprietary corpus of labeled data across multiple domains (including but not limited to social media, chat, and livestreaming apps), and is able to interpret full sentences with linguistic subtleties. The pattern-matching algorithms search sentences for a set of predefined patterns that are commonly associated with harmful content. Users can also add their own rules to these patterns.

Both our models and pattern-matching approaches are robust to and explicitly tested on character replacements, character duplication, leetspeak, misspellings, and other adversarial behavior.

The max input size is 1024 characters, calculated via UTF-16 code units.
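Because the limit is counted in UTF-16 code units rather than visible characters, characters outside the Basic Multilingual Plane (most emojis, for example) count as two units each. The following is a minimal Python sketch for checking the length on the client side before submitting; the helper names are illustrative and not part of the Hive API.

```python
MAX_UTF16_UNITS = 1024  # limit stated above


def utf16_units(text: str) -> int:
    """Return the number of UTF-16 code units needed to encode text."""
    # Each code unit is 2 bytes; "utf-16-le" avoids the 2-byte BOM prefix.
    # "surrogatepass" keeps lone surrogates from raising an error.
    return len(text.encode("utf-16-le", "surrogatepass")) // 2


def fits_limit(text: str, limit: int = MAX_UTF16_UNITS) -> bool:
    """True if text is within the input size limit."""
    return utf16_units(text) <= limit


print(utf16_units("hello"))  # 5
print(utf16_units("hi 😀"))   # 5: the emoji is a surrogate pair (2 units)
```

Text that exceeds the limit can then be truncated or split into several requests before it is sent.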

Quickstart (V2)

An example cURL request for this API is shown below. For more information, see the V2 Sync API and Async API guides.

    # Submit a task with text (sync)
    curl --request POST \
      --url https://api.thehive.ai/api/v2/task/sync \
      --header 'Authorization: Token <API_KEY>' \
      --form 'text_data="Hi there, this is a test task"'

    # Submit a task with a specified language (see below for supported languages)
    curl --request POST \
      --url https://api.thehive.ai/api/v2/task/sync \
      --header 'Authorization: Token <API_KEY>' \
      --form 'text_data="Hi there, this is a test task"' \
      --form 'options="{\"input_language\":\"es\"}"'
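The same request can be prepared from Python. The sketch below only assembles the pieces of the request (URL, header, and form fields) and leaves sending to whichever HTTP client you use; `build_sync_request` is an illustrative helper, not part of an official SDK. The main point it shows is that `options` is sent as a JSON-encoded string.

```python
import json
from typing import Optional

SYNC_URL = "https://api.thehive.ai/api/v2/task/sync"  # from the cURL example


def build_sync_request(text: str, api_key: str,
                       input_language: Optional[str] = None) -> dict:
    """Assemble URL, headers, and form fields for a sync text task."""
    fields = {"text_data": text}
    if input_language is not None:
        # `options` is a form field whose value is a JSON string.
        fields["options"] = json.dumps({"input_language": input_language})
    return {
        "url": SYNC_URL,
        "headers": {"Authorization": f"Token {api_key}"},
        "fields": fields,
    }


req = build_sync_request("Hi there, this is a test task", "<API_KEY>", "es")
print(req["fields"]["options"])  # {"input_language": "es"}
```

How the fields are encoded on the wire (the cURL example uses multipart form data) depends on your HTTP client; confirm the accepted encodings against the API reference.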

If you are integrating with the V3 API, check out the API tab on the Playground (default limit: 100 requests/day).

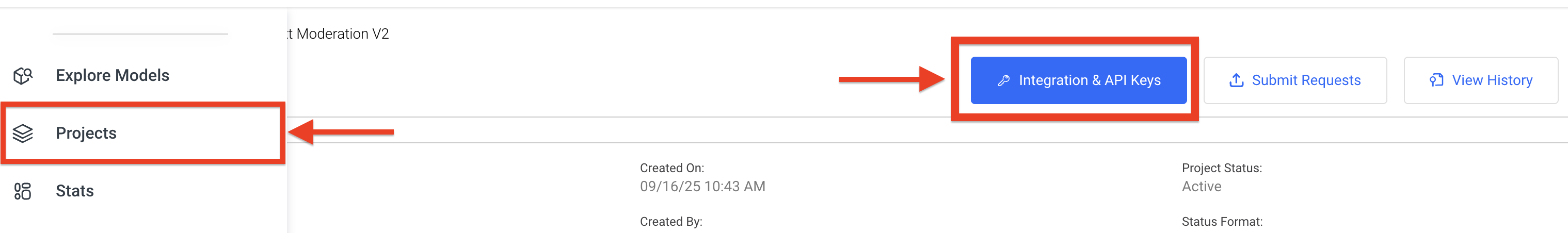

To find your API key, go to your dedicated projects, find the Text Moderation project, and click the "Integration & API Keys" button.

For a more comprehensive API integration guide, plus example model responses, visit this guide.

Text Classification Details

Multilevel Classes

Note: V3 returns the same class names and scores as V2, so your threshold logic stays unchanged.

Our multilevel classes output different levels of moderation so that customers can make more refined moderation decisions. Multilevel heads (sexual, hate, violence, bullying, drugs, and weapons) contain four classes each, and binary heads (such as child exploitation, gibberish, spam, promotions, and phone numbers) contain two classes each. Classes are ordered by severity, from level 3 (most severe) to level 0 (benign). If a head is not supported for a given language, you will receive a score of -1 for it.

Sexual

- 3: Intercourse, masturbation, porn, sex toys and genitalia

- 2: Sexual intent, nudity and lingerie

- 1: Informational statements that are sexual in nature, affectionate activities (kissing, hugging, etc.), flirting, pet names, relationship status, sexual insults and rejecting sexual advances

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Hate

- 3: Slurs, hate speech, promotion of hateful ideology

- 2: Negative stereotypes or jokes, degrading comments, denouncing slurs, challenging a protected group's morality or identity, violence against religion

- 1: Positive stereotypes, informational statements, reclaimed slurs, references to hateful ideology, immorality of protected group's rights

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Violence

- 3: Serious and realistic threats, mentions of past violence

- 2: Calls for violence, destruction of property, calls for military action, calls for the death penalty outside a legal setting, mentions of self-harm/suicide

- 1: Denouncing acts of violence, soft threats (kicking, punching, etc.), violence against non-human subjects, descriptions of violence, gun usage, abortion, self-defense, calls for capital punishment in a legal setting, destruction of small personal belongings, violent jokes

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Bullying

- 3: Slurs or profane descriptors toward specific individuals, encouraging suicide or severe self-harm, severe violent threats toward specific individuals

- 2: Non-profane insults toward specific individuals, encouraging non-severe self-harm, non-severe violent threats toward specific individuals, silencing or exclusion

- 1: Profanity in a non-bullying context, playful teasing, self-deprecation, reclaimed slurs, degrading a person's belongings, bullying toward organizations, denouncing bullying

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Drugs

- 3: Descriptions of the acquisition of drugs and text that explicitly promotes, advertises, or encourages drug use

- 2: References to past drug acquisition or use as well as descriptions of recreational use that do not promote drugs to others

- 1: Language around drugs that is neutral or informational, discouraging, or ambiguous in meaning

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Weapons

- 3: Buying, selling, trading, and constructing bombs and firearms

- 2: Buying, selling, trading, and constructing non-explosive weapons

- 1: Neutral mentions of all weapons

- 0: The text does not contain any of the above

- -1: Language of input is not supported

Binary Classes

Child Exploitation

- 3: Asking for or trading child pornography (cp) or related links, mentioning proclivity for cp, identifiably underage users soliciting sex or pornography, roleplay involving children, mentions of sexual activity or sexual fetishes involving children

- 0: The text does not include any of the above

- -1: Language of input is not supported

Child Safety

- 3: Content that contains a direct or indirect threat of physical violence to children in a school or school-related setting

- 0: The text does not include any of the above

- -1: Language of input is not supported

Self Harm

- 3: Content related to promoting, planning, or carrying out suicide, non-suicidal self harm (cutting, burning, etc.), and behaviors associated with eating disorders

- 0: The text does not include any of the above

- -1: Language of input is not supported

Gibberish

- 3: Keyboard spam and phrases or words that are completely incomprehensible (Ex: "kgvjbwklrgjb", "ef2$gt rgbu").

- 0: The text does not include the above

- -1: Language of input is not supported

Spam

- 3: The text is intended to redirect a user to a different platform, including email addresses, phone numbers, and certain links

- 0: The text does not include the above OR includes a link to an allowlisted domain (i.e., popular, reputable sites)

- -1: Language of input is not supported

Promotions

- 3: Asking for likes/follows/shares, advertising monthly newsletters/special promotions, asking for donations/payments, advertising products, selling pornography, giveaways

- 0: The text does not include the above

- -1: Language of input is not supported

Redirection

- 3: The text is intended to redirect to or encourage the use of a specific social media platform, website, or app

- 0: The text does not include the above

- -1: Language of input is not supported

Phone Numbers

- 3: The text includes a phone number

- 0: The text does not include a phone number

Minor Presence (Explicit)

- 3: The text contains explicit or definite mention of a minor

- 0: The text does not contain explicit or definite mention of a minor

Minor Presence (Implicit)

- 3: The text contains implicit or likely mention of a minor

- 0: The text does not contain implicit or likely mention of a minor

Sexual Description

- 3: The text contains strings that describe sexual imagery. This class is especially useful for catching generative AI prompts intended to create sexual images.

- 0: The text does not contain strings that describe sexual imagery.

Violent Description

- 3: The text contains strings that describe violent imagery where a living being has been harmed. This class is especially useful for catching generative AI prompts intended to create violent images.

- 0: The text does not contain strings that describe violent imagery.

Self Harm Intent

- 3: This text contains implications or encouragement of self-harm, i.e., any form of deliberate injury to one's self.

- 0: This text does not contain implications or encouragement of self-harm.
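To illustrate how the severity levels above might drive moderation decisions, here is a minimal Python sketch. It assumes the response has already been flattened into a dictionary that maps a head name to its severity score (0 to 3, or -1 when the language is not supported). That dictionary shape, the head names used as keys, and the thresholds are all illustrative assumptions, not the documented response format.

```python
from typing import Dict, List

# Hypothetical per-head thresholds: flag when severity >= threshold.
THRESHOLDS: Dict[str, int] = {"sexual": 2, "hate": 2, "violence": 3, "spam": 3}


def flagged_heads(scores: Dict[str, int],
                  thresholds: Dict[str, int] = THRESHOLDS) -> List[str]:
    """Return heads whose severity meets or exceeds its threshold."""
    flagged = []
    for head, threshold in thresholds.items():
        score = scores.get(head, -1)
        if score == -1:
            # -1 means the head was not moderated for this language.
            continue
        if score >= threshold:
            flagged.append(head)
    return flagged


def unsupported_heads(scores: Dict[str, int]) -> List[str]:
    """Return heads that came back as -1 (language not supported)."""
    return [head for head, score in scores.items() if score == -1]


example = {"sexual": 1, "hate": 3, "violence": 2, "spam": -1}
print(flagged_heads(example))      # ['hate']
print(unsupported_heads(example))  # ['spam']
```

Treating -1 as "not evaluated" rather than "benign" keeps content in unsupported languages from passing silently; such content can be routed to a fallback such as human review.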

Supported Languages

The API returns the classified language for each request, using ISO 639-1 language codes. The response will indicate which classes were moderated based on the supported languages below. Classes for supported languages will return standard confidence scores, while classes for non-supported languages will return -1 under those class scores. Unsupported languages and non-language inputs — such as code or gibberish inputs — will be classified as an 'unsupported' language.

| Language | Sexual | Violence | Hate | Bullying | Promotions | Spam | Phone Numbers | Child Exploitation | Child Safety | Drugs | Gibberish | Self Harm | Weapons | Minor Presence (Explicit) | Minor Presence (Implicit) | Redirection | Sexual Description | Violent Description | Self Harm Intent |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| English | Model | Model | Model | Model | Model | Pattern Match | Model | Model | Model | Model | Model | Model | Model | Model (Beta) | Model (Beta) | Model | Model (Beta) | Model (Beta) | Model (Beta) |

| Spanish | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Hindi | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| French | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Portuguese | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Arabic | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| German | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Italian | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | Model (Beta) | - | - | - | - | - | - | - |

| Turkish | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Chinese | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Russian | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Dutch | Model (Beta) | Model (Beta) | Model | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Korean | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Japanese | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Vietnamese | Model | Model | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Romanian | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Polish | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Indonesian | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Tagalog | Model | Model | Model | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Persian | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Norwegian | Model | Model (Beta) | - | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Czech | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Danish | Model (Beta) | Model (Beta) | Model | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Estonian | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Finnish | Model (Beta) | Model (Beta) | Model | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Greek | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Hungarian | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Swedish | Model (Beta) | Model (Beta) | Model | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | Model (Beta) | - | Model (Beta) | Model (Beta) | - | - | - | - | - | - |

| Thai | Model | Model | - | Model | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Ukrainian | Model (Beta) | Model (Beta) | - | Model (Beta) | - | Pattern Match | Model | - | Model (Beta) | - | - | - | - | - | - | - | - | - | - |

| Haitian Creole | Model (Beta) | Model (Beta) | - | Model (Beta) | - | - | Model (Beta) | - | Model (Beta) | - | - | Model (Beta) | - | - | - | - | - | - | - |

Pattern-Matching Algorithms

Hive's string pattern-matching algorithms return the filter type that was matched, the substring that matched the filters, as well as the position of the substring in the text input from the API request.

The string pattern-matching algorithms currently support the following categories:

| Filter Type | Description |
|---|---|
| Profanity | |
| Likeness (Beta) | |
| Celebrity (Beta) | |
| Personal Identifiable Information (PII) | |
| Custom (User-Provided) | |

Listed below are the exact type fields returned in text_filters:

- Profanity: profanity

- Likeness: likeness

- Celebrity: celebrity, politician, athlete, religious_figure

Listed below are the exact type fields returned in pii_entities:

- Personal Identifiable Information (PII): Email Address, U.S. Mailing Address, IP Address, U.S. Phone Number, International Phone Number, U.S. Social Security Number (SSN), Credit Card Number, Age
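As an illustration of how the pattern-matching output (filter type, matched substring, and position) can be used, the Python sketch below masks each matched substring in the original text. The field names (`type`, `value`, `start_index`, `end_index`) and the end-exclusive indexing are assumptions made for this example; check the API reference for the actual response shape.

```python
from typing import Dict, List, Union

Match = Dict[str, Union[str, int]]


def mask_matches(text: str, matches: List[Match], mask: str = "*") -> str:
    """Replace every matched span in text with mask characters."""
    chars = list(text)
    for m in matches:
        start, end = int(m["start_index"]), int(m["end_index"])
        # Clamp to the text bounds so an out-of-range index cannot raise.
        start, end = max(0, start), min(len(chars), end)
        for i in range(start, end):
            chars[i] = mask
    return "".join(chars)


# Hypothetical filter output for the input text below.
text = "you are a jerk"
matches = [
    {"type": "profanity", "value": "jerk", "start_index": 10, "end_index": 14},
]
print(mask_matches(text, matches))  # you are a ****
```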

Internet Watch Foundation Keyword and URL Lists

Hive has partnered with the Internet Watch Foundation (IWF), a non-profit organization working to stop child sexual abuse online. As part of this partnership, Hive integrates the IWF's proprietary keyword and URL lists into the default Text Moderation model for all customers at no additional cost. These lists are dynamic and are updated daily.

- Keyword List: This wordlist contains known terms and code words that offenders use to exchange child sexual abuse material (CSAM) in a discreet manner.

- URL List: This list contains webpages that are confirmed to host CSAM in image or video form.

Only matching items will be returned within your response. We currently support the following categories:

| Filter Type | Description |
|---|---|
| iwf_url_list_match | |
| iwf_keyword_list_single_match | |
| iwf_keyword_list_multi_match | |

Adding Custom Classes to Pattern-Matching

Custom (user-provided) text filters can be configured and maintained directly by the API user from the API Dashboard. A user can have one or more custom classes. Each custom class can have one or more custom text matches: a list of words that should be matched under that class.

For example:

| Custom Class | Custom Text Matches |

|---|---|

| Nationality | American, Canadian, Chinese, ... |

| Race | African American, Asian, Hispanic, ... |

Users can select the Detect Subwords box if they would like any of their custom text matches to be detected whenever they are part of a larger string. For example, if the word "class" is one of the custom text matches, then the word "classification" would be flagged as well.
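The effect of Detect Subwords can be pictured with a small Python sketch. This illustrates the described behavior using regular expressions and is not Hive's implementation; `find_custom_matches` and its parameters are hypothetical.

```python
import re
from typing import List


def find_custom_matches(text: str, terms: List[str],
                        detect_subwords: bool = False) -> List[str]:
    """Return the terms that occur in text (case-insensitive)."""
    found = []
    for term in terms:
        pattern = re.escape(term)
        if not detect_subwords:
            # Require a non-word character (or a string edge) on both sides.
            pattern = rf"(?<!\w){pattern}(?!\w)"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(term)
    return found


terms = ["class"]
print(find_custom_matches("text classification", terms))                        # []
print(find_custom_matches("text classification", terms, detect_subwords=True))  # ['class']
print(find_custom_matches("a class of its own", terms))                         # ['class']
```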

Character substitution is another feature that Hive offers which can be especially helpful when flagging leetspeak. A user can define the characters which they would like to be substituted in the left column of the Custom Character Substitution table. They will then create a list of comma separated replacements in the right column of the table.

For example:

| Characters to be substituted | Substitution Strings (Comma separated) |

|---|---|

| o | 0,(),@ |

| s | $ |

Based on the previous example, we would flag any input that would match a string in Custom Text Matches after substituting all occurrences of 0, (), @ with o and all $ with s.
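A minimal Python sketch of this normalization step, using the substitution table from the example. It illustrates the idea only; the function name is hypothetical and the service's actual matching logic may differ.

```python
from typing import Dict, List

# Target character -> strings that should be read as that character.
SUBSTITUTIONS: Dict[str, List[str]] = {"o": ["0", "()", "@"], "s": ["$"]}


def normalize(text: str, table: Dict[str, List[str]] = SUBSTITUTIONS) -> str:
    """Replace every substitution string with its target character."""
    # Flatten to (replacement, target) pairs, longest replacement first,
    # so multi-character strings such as "()" are handled before shorter ones.
    pairs = sorted(
        ((rep, target) for target, reps in table.items() for rep in reps),
        key=lambda pair: len(pair[0]),
        reverse=True,
    )
    for rep, target in pairs:
        text = text.replace(rep, target)
    return text


print(normalize("f()0 $h@p"))  # foo shop
```

The normalized text can then be compared against the Custom Text Matches list in the usual way.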

Adding Custom Allowlists

Custom allowlists permit users to prevent our models from flagging certain text content. Each custom allowlist can have one or more allowlist strings that our model will not analyze.

For example:

| Allowlist Name | Allowlist Strings |

|---|---|

| URLs | facebook.com, google.com, youtube.com |

| Names | Andrew, Jack, Ben |

Users can select the Detect Subwords box if they would like any of their allowlist strings to be ignored when part of a larger string. For example, if the word "cup" is one of the allowlist strings, then "cup" would be ignored in "cupcakes". If Detect Subwords is selected, another option called Allow Entire Subword will appear. If Allow Entire Subword is selected, then in the scenario above the entire word "cupcakes" would be ignored.
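The interaction between Detect Subwords and Allow Entire Subword can be sketched in Python as follows. The function returns the character spans that would be ignored. It illustrates the behavior described above under a simplifying assumption (words are runs of non-whitespace characters), and all names are hypothetical.

```python
import re
from typing import List, Tuple


def ignored_spans(text: str, allow: List[str], detect_subwords: bool = False,
                  allow_entire_subword: bool = False) -> List[Tuple[int, int]]:
    """Return (start, end) spans of text that the allowlist would ignore."""
    spans = []
    for word in re.finditer(r"\S+", text):
        token = word.group().lower()
        for entry in (a.lower() for a in allow):
            if token == entry:
                spans.append(word.span())          # exact word match
            elif detect_subwords and entry in token:
                if allow_entire_subword:
                    spans.append(word.span())      # ignore the whole word
                else:
                    offset = token.index(entry)    # ignore only the substring
                    start = word.start() + offset
                    spans.append((start, start + len(entry)))
    return spans


text = "two cupcakes"
print(ignored_spans(text, ["cup"]))                                   # []
print(ignored_spans(text, ["cup"], detect_subwords=True))             # [(4, 7)]
print(ignored_spans(text, ["cup"], True, allow_entire_subword=True))  # [(4, 12)]
```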

NOTE: Many widely used, reputable websites are allowlisted by default. However, you can follow the steps below to allowlist additional domains as needed.

Selecting Detect Inside URLs will enable the text filter to ignore any string that is part of the allowlist even if it is part of a URL. For example, if google.com is in the allowlist, then it will be ignored when it is part of https://www.google.com/search?q=something. If Detect Inside URLs is selected, another box titled Allow Entire URL appears. When Allow Entire URL is selected, if a URL contains any words in the allowlist, then the entire URL will be ignored. Adding domains to an allowlist and selecting the Detect Inside URLs and Allow Entire URL options will prevent URLs with those domains from being flagged as spam.


See the API reference for more details on the API interface and response format.