Using Hive's Visual Moderation API

Welcome to Hive's Visual Moderation! This guide will help you configure your project, integrate our API, and understand our model responses.

Note: If you're just evaluating our Visual Moderation API, you can start instantly with the V3 Playground (limited to 100 requests per day) and upgrade to a V2 Enterprise project when you need higher volume.

If you've already spoken to our Sales team, this is the guide you'll follow to integrate your project.

First Steps (Enterprise)

Once we've created a dedicated project for you, follow these steps to open it and grab your API Key:

- Log in to your Hive account.

- Locate the Products dropdown in the header, and go to the Models tab. This is where you will find all your Projects.

- Choose your "Visual Moderation" Project to open up its dashboard.

If you don't see your project, reach out to us using our contact form and we'll be happy to enable it for you.

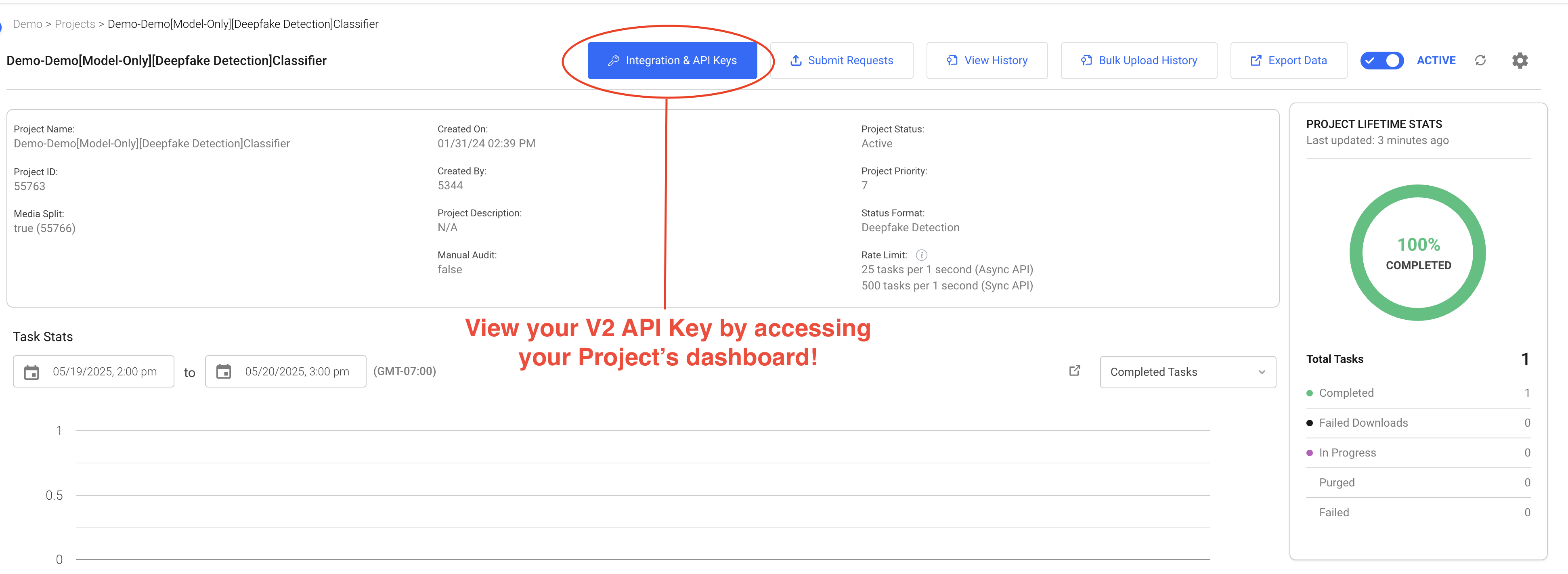

Find Your API Key

Once you have a visual moderation project with Hive, you'll need your API Key. The API Key is a unique authorization token for your project’s endpoint, and you will need to include the API Key as a header in each request made to the API in order to submit tasks for classification.

Tasks are billed to whichever project they are submitted under, so protect your API Key like you would a password! If you have multiple Hive projects, the API Key for each endpoint will be unique. Be sure you are submitting your content to the correct models.
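One way to keep the key out of source code is to read it from an environment variable when your service starts. The sketch below is a minimal illustration: the variable name `HIVE_API_KEY` and the helper `build_auth_headers` are assumptions made for this example, not names Hive prescribes. The header uses the same `Token <key>` form as the request template later in this guide.

```python
import os

def build_auth_headers(env_var="HIVE_API_KEY"):
    """Return request headers carrying the project API Key.

    The environment variable name is a hypothetical choice for this sketch.
    """
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable to your project API Key")
    return {"Authorization": f"Token {api_key}"}
```

If you run several Hive projects, you could pass a different variable name per project so that each request uses the key for the intended endpoint.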

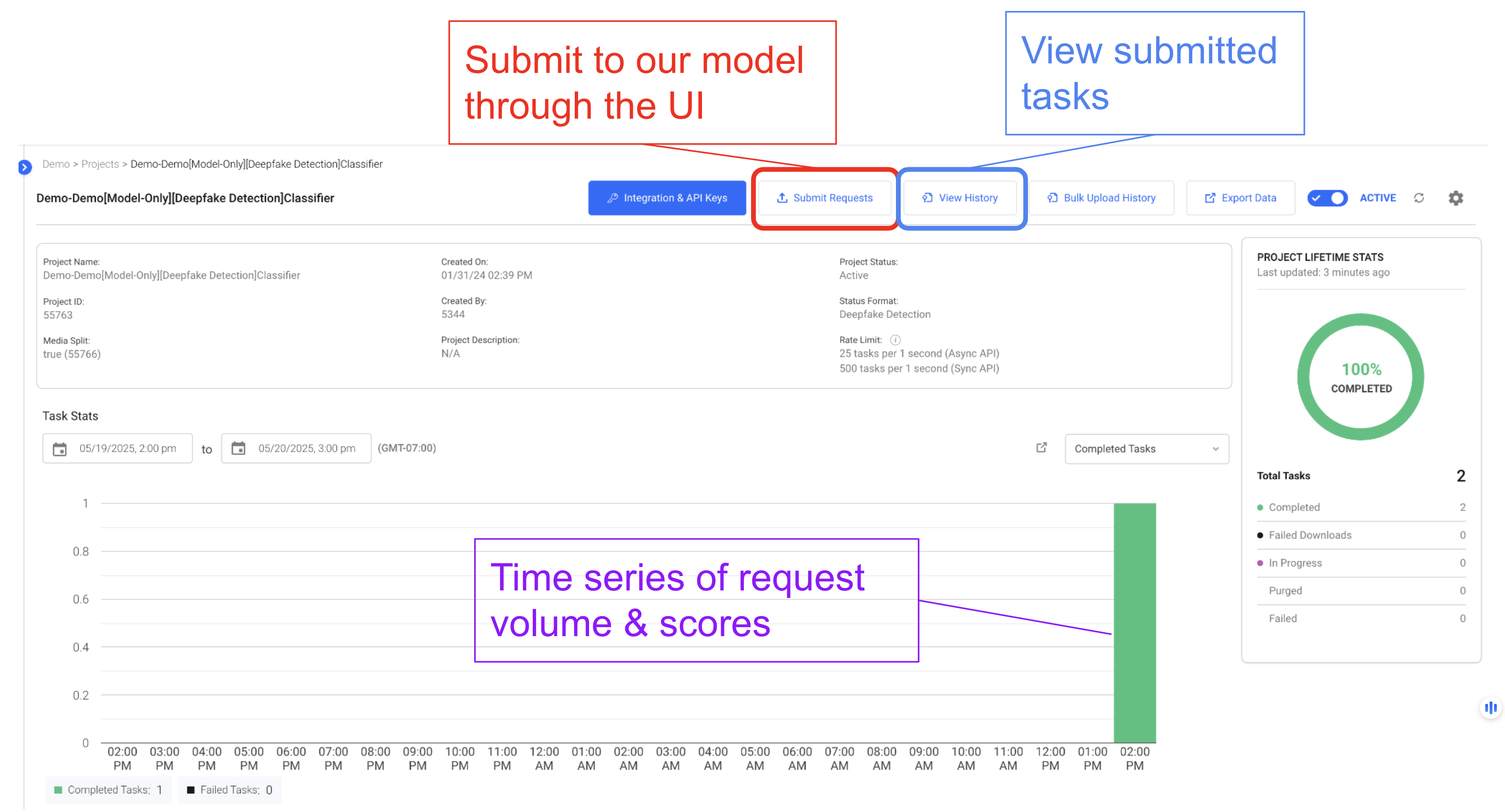

Submitting Tasks to the API

When submitting a moderation task, you’ll need to provide a public or signed URL for your visual content that is readable by Hive APIs. Alternatively, you can upload local media files by specifying the file path in the request. Supported file types include jpg, png, gif, webp, mp4, and webm. A full list is also available in our documentation here.
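Before submitting, it can be useful to reject files that are clearly not in a supported format. The following sketch checks a local path or URL against the six extensions named above. The helper name is hypothetical, and the set is deliberately limited to the types listed in this guide; the full list in the documentation may include others.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse

# File types named in this guide; the full list in the documentation may be longer.
SUPPORTED_EXTENSIONS = {"jpg", "png", "gif", "webp", "mp4", "webm"}

def has_supported_extension(path_or_url):
    """Return True if the path or URL ends in one of the extensions listed above."""
    # urlparse separates the path from any query string (e.g. a signed-URL signature).
    path = urlparse(path_or_url).path
    suffix = PurePosixPath(path).suffix.lower().lstrip(".")
    return suffix in SUPPORTED_EXTENSIONS
```

A check like this only inspects the name, not the file contents, so treat it as a cheap first filter rather than validation.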

You can submit tasks to Hive API endpoints in two ways: synchronous submission and asynchronous submission.

You can also submit tasks through our V2 Project UI.

Synchronous Submission

Synchronous endpoints are optimized for a continuous flow of tasks with low latency requirements. If you have real-time moderation needs, you’ll want to submit tasks synchronously - our API returns model outputs directly in the response message.

NOTE: For synchronous tasks that include video, you should split the video into shorter segments and submit each segment as a related task in order to minimize response latency. We recommend segments no longer than 30 seconds, but the API can handle segments of up to 90 seconds.

Alternatively, you can sample frames (i.e., thumbnails) from your video content and submit those images synchronously.
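To illustrate the segmenting recommendation, the sketch below computes start and end times for consecutive segments of at most 30 seconds. The function name is hypothetical, and the actual cutting of the video (for example with a tool such as ffmpeg) is outside the scope of this example.

```python
def segment_bounds(duration_s, max_segment_s=30.0):
    """Split [0, duration_s] into consecutive (start, end) pairs no longer than max_segment_s."""
    if duration_s <= 0 or max_segment_s <= 0:
        raise ValueError("duration and segment length must be positive")
    bounds = []
    start = 0.0
    while start < duration_s:
        end = min(start + max_segment_s, duration_s)
        bounds.append((start, end))
        start = end
    return bounds

# A 75-second video becomes two full segments and one shorter one.
print(segment_bounds(75.0))  # [(0.0, 30.0), (30.0, 60.0), (60.0, 75.0)]
```

Each pair can then be used to cut one segment and submit it as its own synchronous task.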

To automate task submission, you can use the following code template to populate synchronous requests to Hive APIs with links to your visual content (e.g., in response to new posts) and process responses from Hive moderation models.

    # Imports
    import requests  # Used to call most Hive APIs

    # Inputs
    vis_API_Key = 'your_visAPI_key'  # The unique API Key for your visual project (located in your project dashboard).

    def handle_hive_response(response_dict):
        # Parse model response JSON and use model results for moderation.
        # See a basic example implementation in the last section.
        pass

    def moderate_post_sync(content_url):
        # Example Request (visual, synchronous endpoint, content hosted at URL):
        headers = {'Authorization': f'Token {vis_API_Key}'}  # Example for visual moderation tasks
        data = {'url': content_url}  # This is also where you would add metadata if desired
        model_response = requests.post('https://api.thehive.ai/api/v2/task/sync', headers=headers, data=data)
        response_dict = model_response.json()
        handle_hive_response(response_dict)

Generally, the API will return a response to the request endpoint within 500ms for a thumbnail image and within 10 seconds for a 30-second video segment.

Asynchronous Submission

Asynchronous submission is preferable for large numbers of concurrent tasks or larger files such as long videos. When submitting tasks asynchronously, you must provide a callback URL (e.g., a webhook) to which the API can send a POST request once results are available. More information and example syntax for asynchronous submissions is available here.

Upon receiving an asynchronous task, the Hive API will send an immediate acknowledgement response with a task identifier and close the connection. When results are ready, the API will send a POST request including model responses to your provided callback URL. You can also call the API manually to trigger a callback for a completed task.
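As a rough illustration of the receiving side, the sketch below uses Python's standard `http.server` module to accept a POST at a callback URL, decode the JSON body, and pass it to your own handler. All function names here are hypothetical, the port is a placeholder, and the sketch assumes only that the callback body is JSON; it does not assume any particular fields. A production webhook would sit behind HTTPS and verify that requests really come from Hive.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def process_callback_body(body, on_result):
    """Decode a callback body and pass it to on_result; return an HTTP status code."""
    try:
        payload = json.loads(body)
    except ValueError:
        return 400  # Body was not valid JSON
    on_result(payload)
    return 200

def make_callback_handler(on_result):
    """Build a request handler class that forwards POSTed JSON to on_result."""
    class CallbackHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            status = process_callback_body(self.rfile.read(length), on_result)
            self.send_response(status)
            self.end_headers()
    return CallbackHandler

def serve_callbacks(on_result, port=8080):
    """Run the receiver until interrupted (the port is a placeholder)."""
    HTTPServer(("0.0.0.0", port), make_callback_handler(on_result)).serve_forever()
```

Calling `serve_callbacks(handle_hive_response)` would route each callback to the same response handler used for synchronous tasks, which keeps the moderation logic in one place.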

If you need guidance to determine which submission process is best for your use cases, please reach out to [email protected] - we’d be happy to assist you.

For both submission types, the response will be a JSON object that includes a task identifier and other task information, as well as model predictions.

Response

Hive APIs do not remove your content or ban users themselves. Rather, the Hive API returns classification scores from our models that indicate the type(s) of sensitive subject matter in the submitted content and our confidence that each type is present. You can then decide what actions to take based on your sensitivity and content policies.

To see what this might look like for your moderation needs, it may be helpful to walk through an example model response.

Model Response Format & Confidence Scores

Hive’s visual classifier includes a set of submodels, called model heads, that each identify different types of sensitive visual subject matter—for example, explicit content, weapons, or drugs. The model response includes predictions from each model head as a confidence score between 0 and 1 for each class, which correlates with the probability that the image belongs in the class predicted by the model.

For simplicity, let’s consider a smaller example model with two heads: one for identifying sexual (or “NSFW”) content, and another for identifying guns. Suppose the “sexual” model head has two positive classes: general_nsfw (indicating explicit sexual content); general_suggestive (indicating suggestive content without nudity, underwear, etc.); and a negative class general_not_nsfw_not_suggestive (clean).

Similarly, suppose the “gun” model head has three positive classes: gun_in_hand (person holding a gun); animated_gun (e.g., guns appearing in video games); gun_not_in_hand (gun identified, but not being held or used); and a negative class no_gun (clean).

For this model, an example of the "output" object in the API response for an image would look like this:

    {
      "output": [
        {
          "time": 0,
          "classes": [
            {
              "class": "general_nsfw",
              "score": 0.94
            },
            {
              "class": "general_suggestive",
              "score": 0.05
            },
            {
              "class": "general_not_nsfw_not_suggestive",
              "score": 0.01
            },
            {
              "class": "gun_in_hand",
              "score": 0.88
            },
            {
              "class": "animated_gun",
              "score": 0.04
            },
            {
              "class": "gun_not_in_hand",
              "score": 0.04
            },
            {
              "class": "no_gun",
              "score": 0.04
            }
          ]
        }
      ]
    }

In this example, our hypothetical model is very confident (0.94) that explicit sexual content is in the input image, and reasonably confident (0.88) that at least one person in the input image is holding a gun. Note that confidence scores for each model head are generated independently, and within each head the confidence scores for all positive classes and the negative class sum to 1.
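The per-head behavior can be checked with a few lines of code. The sketch below takes the four classes of the simplified "gun" head, finds the highest-scoring class, and confirms that the head's scores sum to 1. The response format shown here lists classes without naming their head, so the sketch assumes you maintain that grouping yourself; `GUN_HEAD` and `summarize_head` are hypothetical names.

```python
import math

# Classes of the simplified "gun" head from the example above (illustrative only).
GUN_HEAD = ["gun_in_hand", "animated_gun", "gun_not_in_hand", "no_gun"]

def summarize_head(classes, head_classes):
    """Return (top_class, total_score) for the classes belonging to one head."""
    scores = {c["class"]: c["score"] for c in classes if c["class"] in head_classes}
    top_class = max(scores, key=scores.get)
    return top_class, sum(scores.values())

example_classes = [
    {"class": "gun_in_hand", "score": 0.88},
    {"class": "animated_gun", "score": 0.04},
    {"class": "gun_not_in_hand", "score": 0.04},
    {"class": "no_gun", "score": 0.04},
]

top_class, total = summarize_head(example_classes, GUN_HEAD)
# isclose is used because floating-point sums are rarely exactly 1.0.
print(top_class, math.isclose(total, 1.0))
```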

NOTE: For clarity, this example truncated many of the classes and scores that would appear in an actual response object. A real API response is longer but takes the same format. You can see a full list of classes recognized by Hive’s visual models here. If needed, a more detailed description of our classes is available in these subpages.

Video and Timestamps

Our API will send a model response object for each image (i.e., task) you submit. For images, including thumbnail images extracted from a video, time is set to 0 by default.

For video tasks, our backend will split the video into representative frames, run the visual model on each frame, and deliver a single model response object that combines the results for each frame. Our default sampling rate for video is 1 FPS, but this can be increased if needed. Here, the model response object will contain scores for each class on each sampled frame, and time will be a timestamp for when that frame was captured.
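One possible way to reduce a multi-frame response to a single decision is to keep the highest score each class reaches across all frames, along with the timestamp where it occurred. The sketch below assumes the `output` list has one entry per sampled frame in the format shown earlier; the function name is hypothetical, and taking the maximum is only one aggregation choice among several.

```python
def max_score_per_class(output):
    """Return {class_name: (max_score, time_of_max)} across all sampled frames."""
    best = {}
    for frame in output:
        for entry in frame["classes"]:
            name, score = entry["class"], entry["score"]
            # Keep the first frame at which the highest score was seen.
            if name not in best or score > best[name][0]:
                best[name] = (score, frame["time"])
    return best
```

The recorded timestamp makes it easier to show a human reviewer the frame that triggered a flag.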

What To Do With Model Results

Once you have decided on a content policy and what actions you want to take, you can design custom moderation logic that implements your policy based on scores pulled from the model response object.

Continuing with our simplified model, here’s a basic example that defines restricted classes and then flags content if the score for any of those classes meets or exceeds a threshold of 0.9.

In this example, we’ll exclude animated_gun from our list of restricted classes so that model responses including an above-threshold confidence score for this class do not trigger moderation actions. This might reflect a content policy that's ok with animated weapons like those appearing in video games.

    # Inputs
    vis_threshold = 0.9  # Confidence score threshold
    # A selection of classes to moderate based on the example response above.
    banned_classes = ['general_nsfw', 'general_suggestive', 'gun_in_hand', 'gun_not_in_hand']

    def handle_hive_response(response_dict):
        # Map each class name to its score for the first (or only) frame.
        scores_dict = {x['class']: x['score'] for x in response_dict['status'][0]['response']['output'][0]['classes']}
        # For a video input, you may want to iterate over timestamps for each frame and take the max score for each class.
        for class_i in banned_classes:
            # Treat a class that is missing from the response as a score of 0.
            if scores_dict.get(class_i, 0.0) >= vis_threshold:
                # Threshold in restricted class exceeded; call your actual moderation actions here
                print('We should ban this guy!')
                print(f'{class_i}: {scores_dict[class_i]}')
                break

Basic example implementation for processing a Hive visual model response for an image. Not intended for production use.

Generally, a threshold model confidence score of 0.90 is a good starting point to flag sensitive content in a positive class, but you can adjust based on how the model performs on a natural distribution of your data.

NOTE: Since some model heads have more than one positive class, an alternative approach could be to compare the negative (clean) class to a low threshold instead. For example, a check to see if no_gun scores below 0.1 is equivalent to checking if scores from all positive “gun” classes sum to greater than 0.9. This approach may be preferable if you are more concerned with recall (i.e., minimizing false negatives) than precision.
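The difference between the two checks shows up when the model is confident that a gun is present but splits its confidence between positive classes. The sketch below uses made-up scores for the simplified "gun" head; both function names are hypothetical.

```python
def flag_by_positive_class(scores, positive_classes, threshold=0.9):
    """Flag if any single positive class meets the threshold."""
    return any(scores[name] >= threshold for name in positive_classes)

def flag_by_negative_class(scores, negative_class, max_clean_score=0.1):
    """Flag if the clean class falls below max_clean_score."""
    return scores[negative_class] < max_clean_score

# Hypothetical scores: a gun is almost certainly present, but its type is unclear.
scores = {"gun_in_hand": 0.50, "gun_not_in_hand": 0.45, "animated_gun": 0.00, "no_gun": 0.05}
positives = ["gun_in_hand", "gun_not_in_hand", "animated_gun"]

print(flag_by_positive_class(scores, positives))  # False: no single class reaches 0.9
print(flag_by_negative_class(scores, "no_gun"))   # True: the clean class is below 0.1
```

Keep in mind that the clean-class check counts every positive class in the head, including any you chose not to restrict, such as animated_gun in the earlier example.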

This moderation logic is, of course, fully customizable. When thinking about how to implement your content policy, you can consult our list of supported classes to decide which classes to monitor.

You are free to define different thresholds for different classes, or build in different moderation actions for different classes depending on severity. For example, you may decide to simply tag or age-restrict content that scores highly in the general_suggestive class but delete content that scores highly in the general_nsfw class.
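A per-class policy like the one just described could be expressed as a lookup table from class name to a threshold and an action. The sketch below is one possible shape: the thresholds, the action labels, and the names `POLICY` and `decide_actions` are all placeholders for illustration, not recommended values.

```python
# Hypothetical policy: class name -> (threshold, action label).
POLICY = {
    "general_nsfw": (0.90, "delete"),
    "general_suggestive": (0.90, "age_restrict"),
    "gun_in_hand": (0.95, "send_to_review"),
}

def decide_actions(scores, policy=POLICY):
    """Return a list of (class_name, action) for every class at or above its threshold."""
    actions = []
    for name, (threshold, action) in policy.items():
        # A class missing from the response is treated as a score of 0.
        if scores.get(name, 0.0) >= threshold:
            actions.append((name, action))
    return actions

print(decide_actions({"general_nsfw": 0.03, "general_suggestive": 0.95}))
# [('general_suggestive', 'age_restrict')]
```

Keeping the policy in data rather than in branching code makes it easier to tune thresholds as you learn how the model performs on your own content.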

Final Thoughts

We hope that this guide has been a helpful starting point for thinking about how you might use Hive APIs to build automated enforcement of content policies and community guidelines on your platform. If any questions remain, we are happy to help you in designing your moderation solutions. Please feel free to contact us at [email protected] or [email protected].
