Visual Moderation - Overview

Introduction

V3 (Demo) vs V2 (Enterprise) at a glance

- V3 API: the easiest way to try Visual Moderation today, via the Playground. For developer testing only, with a limit of 100 requests/day. Great UI for testing and proofs of concept.

- V2 API: an Enterprise project with dedicated API keys, higher limits, and additional stats.

Note: Visual Moderation requires an annual contract. Smaller customers should use our self-serve VLM.

Try the Playground now; see the API tab there for code samples. Need higher volume? Contact us and we'll increase your V3 limits or create an Enterprise project.

How Visual Moderation works

Hive's Visual Moderation classifies an entire image or video into different categories and assigns a confidence score to each class. The model is multi-headed: each head contains a set of model classes whose scores sum to 1.

For example, given these two Visual Moderation heads:

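- NSFW: general_nsfw, general_suggestive, general_not_nsfw_not_suggestive

- Guns: gun_in_hand, animated_gun, gun_not_in_hand, no_gun

The confidence scores within each head sum to 1. In the example response below, the NSFW scores are 0.9 + 0.05 + 0.05 = 1 and the Guns scores are 0.88 + 0.04 + 0.04 + 0.04 = 1: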

```json
{
  "output": [
    {
      "time": 0,
      "classes": [
        { "class": "general_nsfw", "score": 0.9 },
        { "class": "general_suggestive", "score": 0.05 },
        { "class": "general_not_nsfw_not_suggestive", "score": 0.05 },
        { "class": "gun_in_hand", "score": 0.88 },
        { "class": "animated_gun", "score": 0.04 },
        { "class": "gun_not_in_hand", "score": 0.04 },
        { "class": "no_gun", "score": 0.04 }
      ]
    }
  ]
}
```
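In the example above, the classes arrive as one flat list, so client code has to know which classes belong to which head. The minimal Python sketch below assumes that grouping is hard-coded from the two example heads (the HEADS table and summarize_heads function are hypothetical, not part of any Hive SDK). It checks that each head's scores sum to 1 and picks the top class per head.

```python
import math

# Hypothetical head table, copied from the two example heads above.
# A real integration would list every head it consumes.
HEADS = {
    "nsfw": ["general_nsfw", "general_suggestive", "general_not_nsfw_not_suggestive"],
    "guns": ["gun_in_hand", "animated_gun", "gun_not_in_hand", "no_gun"],
}


def summarize_heads(entry: dict) -> dict:
    """Return {head: (top_class, top_score)} for one output entry.

    Raises ValueError if a head's scores do not sum to 1 (within rounding).
    """
    scores = {c["class"]: c["score"] for c in entry["classes"]}
    summary = {}
    for head, names in HEADS.items():
        head_scores = {n: scores[n] for n in names if n in scores}
        if not head_scores:
            continue  # head not present in this response
        total = sum(head_scores.values())
        if not math.isclose(total, 1.0, abs_tol=1e-3):
            raise ValueError(f"head {head!r} sums to {total}, expected 1")
        top = max(head_scores, key=head_scores.get)
        summary[head] = (top, head_scores[top])
    return summary


# The example entry from the response above.
example_entry = {
    "time": 0,
    "classes": [
        {"class": "general_nsfw", "score": 0.9},
        {"class": "general_suggestive", "score": 0.05},
        {"class": "general_not_nsfw_not_suggestive", "score": 0.05},
        {"class": "gun_in_hand", "score": 0.88},
        {"class": "animated_gun", "score": 0.04},
        {"class": "gun_not_in_hand", "score": 0.04},
        {"class": "no_gun", "score": 0.04},
    ],
}

print(summarize_heads(example_entry))
# {'nsfw': ('general_nsfw', 0.9), 'guns': ('gun_in_hand', 0.88)}
```

Raising on a bad sum is a deliberate choice: a head whose scores do not add up usually means the head table is out of date with the classes the API returns.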

Quickstart (V2)

An example cURL request for this API is shown below. For more information, see the V2 Sync API and Async API guides.

```bash
# Submit a task with an image URL (sync)
curl --request POST \
  --url https://api.thehive.ai/api/v2/task/sync \
  --header 'accept: application/json' \
  --header 'authorization: Token <API_KEY>' \
  --form 'url=http://hive-public.s3.amazonaws.com/demo_request/gun1.jpg'

# Submit a task with a local file (sync)
curl --request POST \
  --url https://api.thehive.ai/api/v2/task/sync \
  --header 'Authorization: Token <API_KEY>' \
  --form 'media=@"<absolute/path/to/file>"'
```
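If you prefer Python to cURL, the sketch below mirrors the image-URL request using only the standard library. It is illustrative: build_sync_url_request is a hypothetical helper, and the multipart body is assembled by hand to match what curl --form sends (a single form field named url). The function only builds the request; sending it, shown commented out at the end, needs network access and a real API key.

```python
import urllib.request
import uuid

SYNC_ENDPOINT = "https://api.thehive.ai/api/v2/task/sync"


def build_sync_url_request(api_key: str, media_url: str) -> urllib.request.Request:
    """Build (without sending) a multipart/form-data POST that mirrors the
    cURL example: a single form field named "url"."""
    boundary = uuid.uuid4().hex  # random delimiter between multipart parts
    body = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="url"\r\n'
        "\r\n"
        f"{media_url}\r\n"
        f"--{boundary}--\r\n"
    ).encode("utf-8")
    return urllib.request.Request(
        SYNC_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Accept": "application/json",
            "Authorization": f"Token {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


req = build_sync_url_request(
    "<API_KEY>", "http://hive-public.s3.amazonaws.com/demo_request/gun1.jpg"
)
# Sending it requires network access and a real key:
# import json
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
```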

If you are integrating with the V3 API, check out the API tab in the Playground (default limit: 100 requests/day).

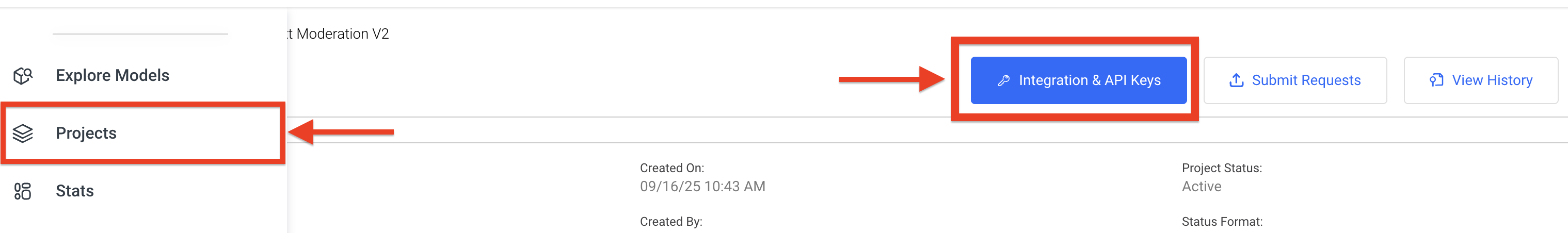

To find your API key, go to your dedicated projects, find the Visual Moderation project, and click the "Integration & API Keys" button.

For a more comprehensive walkthrough, see our API integration guide.

Inputs & Streams

Images & video: Hive splits each video into individual frames, runs the model on each frame, and aggregates the results. The API response is similar for images and videos, but a video response contains multiple entries, each with its own timestamp.
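For videos, a common next step is to collapse the per-frame results. The sketch below assumes a video response's output is a list of entries shaped like the image example (one time value plus a classes list per entry); aggregate_video is a hypothetical helper that keeps each class's maximum score and the timestamps where that score exceeds a threshold (0.9, the starting threshold suggested later on this page).

```python
from collections import defaultdict


def aggregate_video(output: list, threshold: float = 0.9) -> dict:
    """Collapse per-frame results into one record per class:
    the maximum score seen and the times at which score > threshold."""
    max_score = defaultdict(float)  # class -> highest score so far (starts at 0.0)
    times_over = defaultdict(list)  # class -> times where score exceeded threshold
    for entry in output:
        for c in entry["classes"]:
            name, score = c["class"], c["score"]
            max_score[name] = max(max_score[name], score)
            if score > threshold:
                times_over[name].append(entry["time"])
    return {
        name: {"max": max_score[name], "times": times_over.get(name, [])}
        for name in max_score
    }


# Two hypothetical frames, shaped like the image example.
frames = [
    {"time": 0, "classes": [{"class": "gun_in_hand", "score": 0.2},
                            {"class": "no_gun", "score": 0.8}]},
    {"time": 1, "classes": [{"class": "gun_in_hand", "score": 0.95},
                            {"class": "no_gun", "score": 0.05}]},
]
summary = aggregate_video(frames)
# summary["gun_in_hand"] == {"max": 0.95, "times": [1]}
```

Keeping the timestamps, not just the maximum, lets a reviewer jump straight to the frames that triggered a flag.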

Livestreams (RTMP/HLS): Submit a live stream URL, and we'll sample frames from it (1 FPS by default) and process them. You can also send short clips (≤ 30 s) to both our Visual Moderation and Audio Moderation models.

See our stream submission guide for more information.

Note: Contact us to have streams allocated to your project before use.

Visual Moderation Heads & Classes

Hive's visual classification models support a wide variety of classes relevant to content moderation. Broadly, these classes fall into five main categories: sexual content, violent imagery, drugs and other vices, hate imagery, and image attributes. To implement your content policy, consult the class descriptions below and decide which classes to act on.

Note: This page lists the supported visual moderation classes with brief descriptions. For a more detailed breakdown of the subject matter flagged by each class, click the class name or see the Class Descriptions subpages.

Note: Older versions of the API might not perfectly match the outline below. Please reach out to [email protected] if you would like to access the latest content moderation classes.

Sexual

- general_nsfw - genitalia, sexual activity, nudity, buttocks, sex toys, animal genitalia

- general_suggestive - shirtless men, underwear / swimwear, sexually suggestive poses without genitalia, occluded or blurred sexual activity

- general_not_nsfw_not_suggestive - none of the above, clean

- yes_sexual_activity - a sex act or stimulation of genitals is present in the scene

- no_sexual_activity - no sex act is present in the scene

- yes_realistic_nsfw - live nudity, sex acts, or photo-realistic representations of nudity or sex acts

- no_realistic_nsfw - non-photorealistic representations of nudity or sex acts (statues, crude drawings, paintings etc.); lack of any NSFW content

- yes_female_underwear - lingerie, bras, panties

- no_female_underwear

- yes_bra - standard bras, including ones worn under sheer clothing

- no_bra

- yes_panties - women's underwear, including boyshorts and thongs

- no_panties

- yes_negligee - negligee, chemises, and other sheer, nightgown-type garments

- no_negligee

- yes_male_underwear - briefs (e.g., Fruit of the Loom), boxers

- no_male_underwear

- yes_sex_toy - dildos, certain lingerie

- no_sex_toy

- yes_cleavage - identifiable female cleavage

- no_cleavage

- yes_female_nudity - breasts or female genitalia

- no_female_nudity

- yes_male_nudity - male genitalia

- no_male_nudity

- yes_female_swimwear - bikinis, one-pieces, not underwear

- no_female_swimwear

- yes_bodysuit - bodysuits that do not cover the thigh, including corsets

- no_bodysuit

- yes_miniskirt - skirts that end above the mid-thigh

- no_miniskirt

- yes_sports_bra - sports bras, bralettes, bandeaus, and other bra-like clothing

- no_sports_bra

- yes_sportswear_bottoms - bottoms worn during exercise

- no_sportswear_bottoms

- yes_bulge - penises that are visible underneath clothing

- no_bulge

- yes_breast - female breasts where nipples or areolas are visible

- no_breast

- yes_genitals - human genitals (vulvas, penises, testicles)

- no_genitals

- yes_butt - exposed butts

- no_butt

- kissing - mouth-to-mouth contact as well as cheek and forehead kisses

- licking - mouth-to-body contact of any kind, including oral sex

- no_tongue

- yes_male_shirtless - shirtless below mid-chest

- no_male_shirtless

- yes_sexual_intent - occluded, blurred, or hidden sexual activity

- no_sexual_intent

- yes_undressed - a subject is nude/unclothed, even if genitals etc. are not visible due to pose or digital overlay, or are covered by an object

- no_undressed - underwear, swimwear, shirtless men if not evident they are nude

- animal_genitalia_and_human - sexual activity including both animals and humans

- animal_genitalia_only - animals mating and pictures of animal genitalia

- animated_animal_genitalia - drawings of sexual activity involving animals

- no_animal_genitalia - none of the above, clean

Violence

- gun_in_hand - person holding rifle, handgun

- gun_not_in_hand - rifle, handgun, not in hand

- animated_gun - gun in games, cartoons, etc.; can be in hand or not

- no_gun

- knife_in_hand - person holding knife, sword, machete, razor blade

- knife_not_in_hand - knife, sword, machete, razor blade, not in hand (outside of culinary settings)

- culinary_knife_not_in_hand - culinary knives not being held or handled by a person

- culinary_knife_in_hand - knife being used for preparing food

- no_knife

- very_bloody - gore, visible bleeding, self-cutting

- a_little_bloody - fresh cuts / scrapes, light bleeding

- other_blood - animated blood, fake blood, animal blood such as game dressing

- no_blood - no blood present; minor scabs, scars, acne, etc. are not considered ‘blood’ by the model

- hanging - the presence of a human hanging by noose (dead or alive)

- noose - a noose is present in the image with no human hanging from it

- no_hanging_no_noose - no person hanging and no noose present

- human_corpse - human dead body present in image

- animated_corpse - animated dead body present in image

- no_corpse

- yes_emaciated_body - emaciated human or animal body present in image

- no_emaciated_body

- yes_self_harm - self-cutting, burning, suicide, or other self-harm methods present in image

- no_self_harm

- yes_animal_abuse - animals being beaten, tortured, or treated inhumanely as well as animals with graphic injuries

- no_animal_abuse

- yes_fight - two or more people engaging in a physical fight

- no_fight

- yes_child_safety - shirtless child 11 years old or younger present in the image

- no_child_safety

Drugs and other vices

- yes_pills - pills and / or drug powders

- no_pills - no pills and / or drug powders

- illicit_injectables - heroin and other illegal injectables

- medical_injectables - injectables for medical use

- no_injectables - no injectable drug paraphernalia

- yes_smoking - cigarettes, cigars, marijuana, vapes, or other smoking paraphernalia

- no_smoking - no cigarettes, cigars, marijuana, vapes, or other smoking paraphernalia

- yes_marijuana - marijuana or marijuana-related paraphernalia

- no_marijuana

- yes_gambling - depicts gambling activity like slot machines, casino games, sports betting, or lottery where betting is visible or implied

- no_gambling - no gambling activity, regular card/dice games or competitive games with no evidence of betting

- yes_drinking_alcohol - depicts alcoholic beverages being consumed

- yes_alcohol - depicts alcoholic beverages, present but not being consumed

- animated_alcohol - depicts alcoholic beverages in animated movies, cartoons, or art

- no_alcohol - does not depict alcoholic beverages or identifiable alcohol use

Hate

- yes_nazi - Nazi symbols

- no_nazi - absence of the above

- yes_terrorist - ISIS flag

- no_terrorist - absence of the above

- yes_kkk - KKK symbols

- no_kkk - absence of the above

- yes_confederate - shows the Confederate "stars and bars," including graphics, clothing, tattoos, and spinoff flags

- no_confederate - absence of the above

- yes_middle_finger - middle finger

- no_middle_finger - absence of the above

Other Attributes

- text - any form of text or writing is present somewhere in the image

- no_text - no text present in the image

- yes_overlay_text - digitally overlaid text is present on an image (think meme text)

- no_overlay_text - lack of digitally overlaid text in the image

- yes_child_present - a baby or toddler is present in the image

- no_child_present

- yes_religious_icon - a religious icon is present in this image

- no_religious_icon

- yes_drawing - a drawing, painting, or sketch is the central part of the image

- no_drawing

- animated - the image is animated

- hybrid - the image is partially animated

- natural - the image has no animation

- yes_qr_code - the image contains a QR code

- no_qr_code - the image does not contain a QR code
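Turning these classes into a content policy mostly means deciding which classes each of your rules cares about. The sketch below uses a hypothetical POLICY mapping (the rule names and class choices are illustrative only) and scores each rule as the maximum score among its classes in one output entry. It also reports configured classes that are absent from the response, which can catch typos or an older API version that lacks a class.

```python
# Hypothetical policy: the rule names and class choices are illustrative only.
POLICY = {
    "weapons": ["gun_in_hand", "gun_not_in_hand", "knife_in_hand"],
    "explicit_nudity": ["general_nsfw", "yes_genitals", "yes_breast"],
    "hate_symbols": ["yes_nazi", "yes_kkk", "yes_terrorist"],
}


def policy_scores(entry: dict, policy: dict) -> tuple:
    """Score each rule as the max score among its classes in one output entry.

    Also returns configured class names that are absent from the entry
    (e.g. a typo, or a class an older API version does not return).
    """
    scores = {c["class"]: c["score"] for c in entry["classes"]}
    rule_scores = {
        rule: max((scores[n] for n in names if n in scores), default=0.0)
        for rule, names in policy.items()
    }
    configured = {n for names in policy.values() for n in names}
    missing = sorted(configured - set(scores))
    return rule_scores, missing


entry = {"time": 0, "classes": [
    {"class": "gun_in_hand", "score": 0.88},
    {"class": "gun_not_in_hand", "score": 0.04},
    {"class": "knife_in_hand", "score": 0.01},
    {"class": "general_nsfw", "score": 0.02},
    {"class": "yes_nazi", "score": 0.97},
]}
rule_scores, missing = policy_scores(entry, POLICY)
# rule_scores == {'weapons': 0.88, 'explicit_nudity': 0.02, 'hate_symbols': 0.97}
# missing == ['yes_breast', 'yes_genitals', 'yes_kkk', 'yes_terrorist']
```

Only the "positive" classes go into the mapping; the matching no_* classes carry no extra information once the positive scores are known.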

Brand Safety & Suitability - GARM taxonomy

Hive's Brand Safety and Brand Suitability APIs are powered by Hive's visual moderation model, with results additionally mapped to the GARM (Global Alliance for Responsible Media) Brand Safety & Suitability Framework, which was established as an industry standard for categorizing harmful content. For more information, click here.

Request Format (V2)

Each request includes one field for the media being submitted: media for a local file upload, or url for a media URL. For more information about submitting a task, see our API reference guides for synchronous and asynchronous submissions.

```bash
# Submit a task with an image URL
# (this is a sync example; see the API reference for async)
curl --request POST \
  --url https://api.thehive.ai/api/v2/task/sync \
  --header 'accept: application/json' \
  --header 'authorization: Token <API_KEY>' \
  --form 'url=http://hive-public.s3.amazonaws.com/demo_request/gun1.jpg'

# Submit a task with a local file
# (this is a sync example; see the API reference for async)
curl --request POST \
  --url https://api.thehive.ai/api/v2/task/sync \
  --header 'Authorization: Token <API_KEY>' \
  --form 'media=@"<absolute/path/to/file>"'
```

To get started with V3, check out our Playground and go to the "API" tab for code samples.
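The local-file cURL request can be sketched in Python the same way. build_sync_file_request is a hypothetical helper: it reads the file and sends it in a multipart field named media, matching the cURL --form 'media=@...' example. Guessing the part's Content-Type from the file extension is an assumption about a sensible default, not a documented API requirement.

```python
import mimetypes
import os
import urllib.request
import uuid

SYNC_ENDPOINT = "https://api.thehive.ai/api/v2/task/sync"


def build_sync_file_request(api_key: str, path: str) -> urllib.request.Request:
    """Build (without sending) a multipart/form-data POST that uploads the
    file at `path` in a form field named "media", like the cURL example."""
    boundary = uuid.uuid4().hex
    filename = os.path.basename(path)  # assumed not to contain quotes
    part_type = mimetypes.guess_type(filename)[0] or "application/octet-stream"
    with open(path, "rb") as f:
        payload = f.read()
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="media"; filename="{filename}"\r\n'
        f"Content-Type: {part_type}\r\n"
        "\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        SYNC_ENDPOINT,
        data=head + payload + tail,
        method="POST",
        headers={
            "Authorization": f"Token {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


# Usage (requires network access and a real key):
# import json
# req = build_sync_file_request("<API_KEY>", "/absolute/path/to/file.jpg")
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
```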

Choosing Thresholds for Visual Moderation

Note: V3 returns the same class names and confidence scores as V2, so your threshold logic stays unchanged.

For each of the classes above, you will need to set thresholds that decide when to take action based on the model results. For best results, we recommend a proper threshold analysis on a natural distribution of your data (for help with this, contact Hive at the address below). As a general starting point, flag an image for any class of interest when its confidence score is above 0.90.
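A starting-point threshold check might look like the sketch below. It flags a class only when its score is strictly greater than 0.90, matching the guidance above to flag scores over 0.90; the per-class OVERRIDES table and the flagged_classes function are hypothetical, and the override value is a placeholder for numbers you would derive from your own analysis.

```python
DEFAULT_THRESHOLD = 0.90

# Hypothetical per-class overrides; placeholders, not recommended values.
OVERRIDES = {"yes_middle_finger": 0.95}


def flagged_classes(entry: dict, classes_of_interest: set,
                    overrides: dict = OVERRIDES,
                    default: float = DEFAULT_THRESHOLD) -> list:
    """Return the classes of interest whose score is strictly above their threshold."""
    return [
        c["class"]
        for c in entry["classes"]
        if c["class"] in classes_of_interest
        and c["score"] > overrides.get(c["class"], default)
    ]


entry = {"time": 0, "classes": [
    {"class": "gun_in_hand", "score": 0.93},
    {"class": "yes_middle_finger", "score": 0.93},
    {"class": "no_gun", "score": 0.99},
]}
print(flagged_classes(entry, {"gun_in_hand", "yes_middle_finger"}))
# ['gun_in_hand']
```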

For questions on best practices, please message your point of contact at Hive or send a message to [email protected] to contact our API team directly.

Supported File Types

Image formats:

- gif

- jpg

- png

- webp

Video formats:

- mp4

- webm

- avi

- mkv

- wmv

- mov
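To reject unsupported uploads before calling the API, you can check the file extension against the lists above. This is a sketch: media_kind is a hypothetical helper that trusts the extension rather than inspecting file contents, and because it accepts only the extensions listed here, variants such as .jpeg are rejected; confirm with Hive whether those are accepted.

```python
from pathlib import Path

IMAGE_FORMATS = {"gif", "jpg", "png", "webp"}
VIDEO_FORMATS = {"mp4", "webm", "avi", "mkv", "wmv", "mov"}


def media_kind(filename: str) -> str:
    """Return "image" or "video" based on the file extension (case-insensitive).

    Only the extensions listed on this page are accepted; anything else
    (including variants such as .jpeg) raises ValueError.
    """
    ext = Path(filename).suffix.lower().lstrip(".")
    if ext in IMAGE_FORMATS:
        return "image"
    if ext in VIDEO_FORMATS:
        return "video"
    raise ValueError(f"unsupported file type: {filename!r}")


print(media_kind("clip.MOV"))   # video
print(media_kind("meme.webp"))  # image
```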

Thresholds

We recommend starting with a threshold of 0.9 and then refining it to suit your specific use case.
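Refining a threshold usually means measuring it on labeled samples of your own content. One common approach (a generic technique, not a Hive-specific procedure) is a sweep: for each candidate threshold, compute precision and recall against human labels, then pick the lowest threshold that meets your precision target. The functions and sample data below are hypothetical and handle a single class.

```python
def sweep_thresholds(scores, labels, candidates):
    """Return (threshold, precision, recall) for each candidate threshold.

    scores: model scores for one class on a labeled sample of your content.
    labels: True where a human reviewer judged the item a violation.
    An item is flagged when score > threshold. Precision is None when
    nothing is flagged (undefined).
    """
    positives = sum(labels)
    results = []
    for t in candidates:
        flagged = [s > t for s in scores]
        tp = sum(f and y for f, y in zip(flagged, labels))
        fp = sum(f and not y for f, y in zip(flagged, labels))
        precision = tp / (tp + fp) if tp + fp else None
        recall = tp / positives if positives else 0.0
        results.append((t, precision, recall))
    return results


def lowest_threshold_for_precision(results, target):
    """Pick the lowest threshold whose precision meets the target, or None."""
    ok = [t for t, p, _ in results if p is not None and p >= target]
    return min(ok) if ok else None


scores = [0.95, 0.92, 0.85, 0.60, 0.97, 0.30]  # hypothetical sample
labels = [True, False, True, False, True, False]
results = sweep_thresholds(scores, labels, [0.5, 0.8, 0.9])
print(lowest_threshold_for_precision(results, target=0.7))  # 0.8
```

On this tiny sample, precision at 0.9 (2/3) is lower than at 0.8 (3/4), so precision need not rise monotonically with the threshold. That is one reason to run the sweep on a sample that reflects the natural distribution of your data.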
