CSE Text Classifier API

This model is available to Enterprise customers only. Contact us to enable access for your organization.

Overview

Hive's CSE Text Classifier API, created in partnership with Thorn, detects suspected child sexual exploitation (CSE) in English and Spanish text. It complements our CSAM detection suite by filling a critical content gap: text-based child sexual exploitation, such as in user messages and conversations. Together, these models give customers detection coverage across text, image, and video.

Each submitted text sequence can be at most 1024 characters long and is tokenized before it is passed to the classifier. The classifier then returns a score between 0 and 1 (inclusive) for each of the possible labels.
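This page does not say what happens to text longer than 1024 characters or how to handle it. One option is to split long text client-side before submitting it. The sketch below assumes you want to send every piece separately. The function name `split_for_classifier` is hypothetical. The sketch breaks on whitespace and hard-splits any single word that exceeds the limit. Python's `len` counts code points, which may not match how the API counts characters.

```python
MAX_CHARS = 1024  # maximum input size stated in this documentation


def split_for_classifier(text: str, max_chars: int = MAX_CHARS) -> list[str]:
    """Hypothetical helper: split text into pieces of at most max_chars characters.

    Breaks on whitespace where possible (runs of whitespace, including
    newlines, collapse to single spaces). A single word longer than
    max_chars is hard-split so that no piece exceeds the limit.
    """
    chunks: list[str] = []
    current = ""
    for word in text.split():
        # Hard-split any single word that is itself too long.
        while len(word) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(word[:max_chars])
            word = word[max_chars:]
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars:
            current = candidate
        else:
            # current is non-empty here, because word alone always fits.
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks
```

Splitting can separate context that the classifier would otherwise see together. Whether to chunk, truncate, or reject long inputs is a policy decision for your team.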

Labels

There are seven possible labels:

- csa_discussion: This is a broad category, encompassing text fantasizing about or expressing outrage toward child sexual abuse (CSA), as well as text discussing sexually harming children in an offline or online setting.
- child_access: Text discussing sexually harming children in an offline or online setting.
- csam: Text related to users talking about, producing, asking for, transacting in, and sharing child sexual abuse material (CSAM).
- has_minor: Text where a minor is unambiguously being referenced.
- self_generated_content: Text where users are talking about producing self-generated content, offering to share their self-generated content with others, or generally talking about self-generated images and/or videos.
- sextortion: Text related to sextortion, in which a perpetrator threatens to spread a victim's intimate imagery in order to extort additional actions from them. This encompasses messages where an offender is sextorting another user, users talking about being sextorted, and users reporting sextortion either for themselves or on behalf of others.
- not_pertinent: The text sequence does not match any of the above labels.

Response

If any of the above labels other than not_pertinent receives a score above its internally set threshold, that label is returned in the pertinent_labels section of the response. If not_pertinent scores above its threshold, pertinent_labels is empty.
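Because thresholds are applied server-side, client code can often act on pertinent_labels alone without re-checking raw scores. The following is a minimal sketch that assumes the parsed response is a dict whose "pertinent_labels" key holds a list of label-name strings. That shape is an assumption; check the API reference page for the real nesting and item format. The helper names are hypothetical.

```python
# Label names as listed in this documentation (excluding not_pertinent).
PERTINENT_LABELS = frozenset({
    "csa_discussion", "child_access", "csam", "has_minor",
    "self_generated_content", "sextortion",
})


def flagged_labels(response: dict) -> set[str]:
    """Return the labels flagged in a parsed response.

    ASSUMPTION: response["pertinent_labels"] is a list of label-name strings.
    """
    labels = response.get("pertinent_labels") or []
    unknown = set(labels) - PERTINENT_LABELS
    if unknown:
        # Surface unexpected names instead of silently dropping them.
        raise ValueError(f"unexpected label(s): {sorted(unknown)}")
    return set(labels)


def is_not_pertinent(response: dict) -> bool:
    """An empty pertinent_labels list means no label crossed its threshold."""
    return not flagged_labels(response)
```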

A text sequence may score highly on several labels at once. In these cases, combining the label definitions can help you understand the situation and decide which cases are actionable under your moderation team's policies. For instance, text scoring high on both csam and child_access may come from someone who is potentially abusing children offline and producing CSAM.
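One way to encode label combinations is a small ordered rule table that maps sets of co-occurring labels to a review priority. The rules and priority names below are hypothetical and illustrative only. Only the csam plus child_access pairing comes from this page; replace the rest with your own policy.

```python
# HYPOTHETICAL escalation rules, checked in order; the first match wins.
# Only the csam + child_access pairing is taken from this documentation.
ESCALATION_RULES: list[tuple[frozenset[str], str]] = [
    (frozenset({"csam", "child_access"}), "urgent"),  # possible offline abuse + CSAM
    (frozenset({"sextortion", "has_minor"}), "urgent"),  # illustrative only
    (frozenset({"csam"}), "high"),
    (frozenset({"sextortion"}), "high"),
]


def triage(labels: set[str]) -> str:
    """Return the priority of the first matching rule, else 'review' or 'none'."""
    for required, priority in ESCALATION_RULES:
        if required <= labels:  # every label in the rule was flagged
            return priority
    # Flagged but unmatched labels still go to human review in this sketch.
    return "review" if labels else "none"
```

Ordering the rules from most to least specific lets a combined signal take precedence over its individual labels.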

To see an annotated example of an API response object for this model, you can visit its API reference page.

Integrating with the CSE Text Classifier API

Each Hive model (Visual Moderation, CSE Text Classifier, etc.) lives inside a “Project” that your Hive rep will create for you.

- The CSE Text Classifier Project comes with its own API key—use it in the Authorization header whenever you call our endpoints.

- If you use other Hive models, keep their keys separate, even though the API URL is the same.

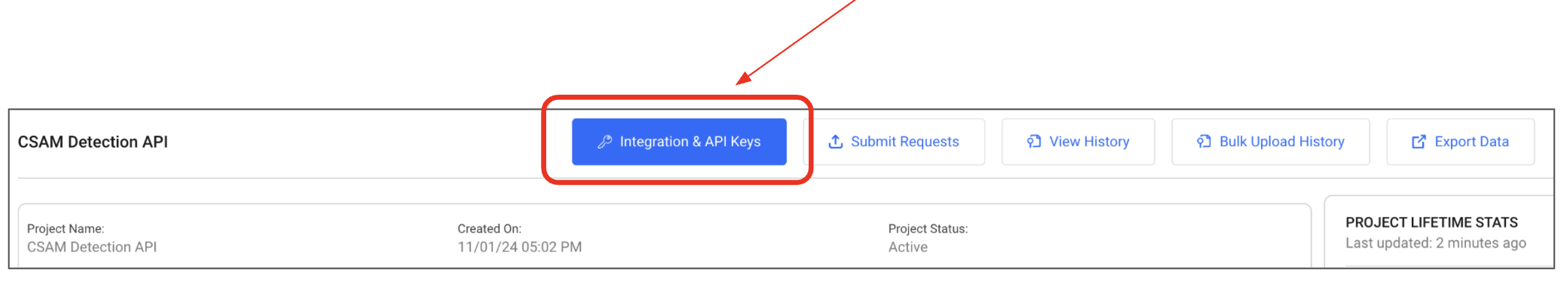

- Tip: In the Hive dashboard, open Projects → find your CSE Text Classifier Project → click Integration & API Keys to copy the correct key.
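As an illustration of keeping one key per Project, the sketch below reads each key from its own environment variable and builds (but does not send) a POST request authorized with the CSE Text Classifier key. Several details are assumptions not confirmed by this page: the endpoint URL (a placeholder here), the `Token` authorization scheme, the `text_data` form field, and the environment variable names. Take the real values from the API reference page.

```python
import os
import urllib.parse
import urllib.request

# ASSUMPTION: placeholder URL; use the real endpoint from the API reference page.
API_URL = "https://api.example.invalid/hive-endpoint"

# HYPOTHETICAL env var names: one key per Project, never shared between models.
PROJECT_KEY_ENV = {
    "cse_text": "HIVE_CSE_TEXT_API_KEY",
    "visual_moderation": "HIVE_VISUAL_MODERATION_API_KEY",
}


def key_for(project: str) -> str:
    """Look up the API key for a given Project from its own env var."""
    return os.environ[PROJECT_KEY_ENV[project]]


def build_cse_request(text: str, api_key: str, url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the CSE Text Classifier.

    ASSUMPTIONS: the "Token" scheme and the "text_data" form field.
    """
    body = urllib.parse.urlencode({"text_data": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Token {api_key}"},
    )
```

Sending the request is then a call to `urllib.request.urlopen(req)`. Passing `key_for("cse_text")` explicitly makes it harder to send CSE traffic with another model's key by mistake.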
