CSAM Detection - Hash Matching API

Integrate with our CSAM Detection API for both images and videos

Deprecated Endpoint: Soon, we will no longer offer separate endpoints for our Hash Matching and Classifier CSAM detection models. Going forward, customers should use our combined endpoint.

Overview

Hive's partnership with Thorn lets Hive customers easily integrate Thorn's API to match their media against known CSAM (Child Sexual Abuse Material) and SE (Sexual Exploitation) examples in Thorn's database. Both images and videos are accepted.

Each image is first hashed, and the hash is then matched against an index of known CSAM. Every matching image in the database is returned along with a matchDistance, which indicates how dissimilar the source media is from the matched target media. Videos are matched using two methods: the MD5 hash checks whether the whole video is known CSAM content, and the videosaferhash checks each scene in the video (described in more detail below).
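To illustrate what a whole-file hash means, the sketch below computes an MD5 digest of a file with Python's standard hashlib. It is an illustration only: Hive computes its hashes server-side, and the helper name md5_of_file is hypothetical. Because MD5 covers every byte of the file, changing even a single byte (for example by re-encoding or trimming) produces a different digest, so an MD5 match only catches byte-identical copies of a known file.

```python
import hashlib


def md5_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, read in chunks to bound memory use.

    Illustration only (hypothetical helper): Hive hashes submitted media server-side.
    """
    digest = hashlib.md5()
    with open(path, "rb") as f:
        # Read fixed-size chunks until f.read() returns b"" at end of file.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```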

Note: Videos are always billed at 1 FPS on this endpoint.
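As a rough planning aid, the hypothetical helper below estimates how many frames a video is billed for under the 1 FPS rule. Rounding a partial final second up to a whole frame is an assumption, not documented billing behavior; confirm the exact rule with your Hive rep.

```python
import math


def estimated_billed_frames(duration_seconds: float, fps: float = 1.0) -> int:
    """Estimate billed frames for a video at the fixed 1 FPS billing rate.

    Assumption: a partial final second is rounded up to one whole frame.
    """
    if duration_seconds < 0:
        raise ValueError("duration must be non-negative")
    return math.ceil(duration_seconds * fps)
```

Under these assumptions, a 90-second video is estimated at 90 billed frames and a 90.4-second video at 91.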

Response

For a submitted image, the response returns any database matches under the hashes key. If this key is absent, there were no matches. Each item in hashes corresponds to one of the hashes used and the match associated with that hash.

For a submitted video, the MD5 hash result behaves the same way as for an image.
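The sketch below reads an image (or video MD5) result, treating a missing hashes key as "no matches" as described above. It assumes hashes is a top-level key of the decoded JSON object holding a list of items, each of which may carry a matchDistance field; the real nesting is shown in the API Reference, so treat these field paths and the helper names has_matches and match_distances as hypothetical.

```python
from typing import Any, Dict, List


def has_matches(response: Dict[str, Any]) -> bool:
    """True if the response contains a non-empty `hashes` entry.

    Assumption: `hashes` is a top-level key holding a list of match items.
    """
    return bool(response.get("hashes"))


def match_distances(response: Dict[str, Any]) -> List[float]:
    """Collect `matchDistance` values; a smaller value means less dissimilar."""
    return [
        item["matchDistance"]
        for item in response.get("hashes", [])
        if "matchDistance" in item
    ]
```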

For videoSaferhashv0 hash matching, the matching scenes are returned. Each scene is defined by a startTimestamp and an endTimestamp that mark the portion of the submitted video that matches known media in the CSAM database.
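Because matching scenes are reported as startTimestamp/endTimestamp pairs, a review tool may want to collapse overlapping or touching scenes into merged spans before jumping to them in a player. The sketch below applies a standard interval merge. It assumes each scene is a dict with numeric startTimestamp and endTimestamp values in the same unit; the unit, the surrounding response structure, and the helper names are assumptions, so check the API Reference before relying on them.

```python
from typing import Any, Dict, List, Tuple


def merge_scenes(scenes: List[Dict[str, Any]]) -> List[Tuple[float, float]]:
    """Merge overlapping or touching [start, end] scene ranges.

    Assumes each scene has numeric `startTimestamp` and `endTimestamp`
    expressed in the same unit.
    """
    spans = sorted((s["startTimestamp"], s["endTimestamp"]) for s in scenes)
    merged: List[Tuple[float, float]] = []
    for start, end in spans:
        if merged and start <= merged[-1][1]:
            # Overlaps or touches the previous span: extend it.
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    return merged


def total_matched(scenes: List[Dict[str, Any]]) -> float:
    """Total duration covered by matching scenes, counting overlaps once."""
    return sum(end - start for start, end in merge_scenes(scenes))
```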

For an annotated example of an API response object for this model, see our API Reference.

Supported File Types

Image Formats:

- gif
- jpg
- png

Video Formats:

- mp4
- mov
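A client can skip obviously unsupported uploads before calling the API. The hypothetical check below looks only at the file extension and uses exactly the formats listed above; it is a convenience pre-filter, and the API's own validation remains authoritative.

```python
from pathlib import Path
from typing import Optional

# Formats listed under "Supported File Types".
IMAGE_FORMATS = {"gif", "jpg", "png"}
VIDEO_FORMATS = {"mp4", "mov"}


def media_kind(filename: str) -> Optional[str]:
    """Return "image", "video", or None based only on the file extension."""
    ext = Path(filename).suffix.lower().lstrip(".")
    if ext in IMAGE_FORMATS:
        return "image"
    if ext in VIDEO_FORMATS:
        return "video"
    return None
```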

Integrating with the CSAM Detection API

Each Hive model (Visual Moderation, CSAM Detection, etc.) lives inside a “Project” that your Hive rep will create for you.

- The CSAM Detection Project comes with its own API key. Use it in the Authorization header whenever you call our endpoints.

- If you use other Hive models, keep their keys separate, even though the API URL is the same.

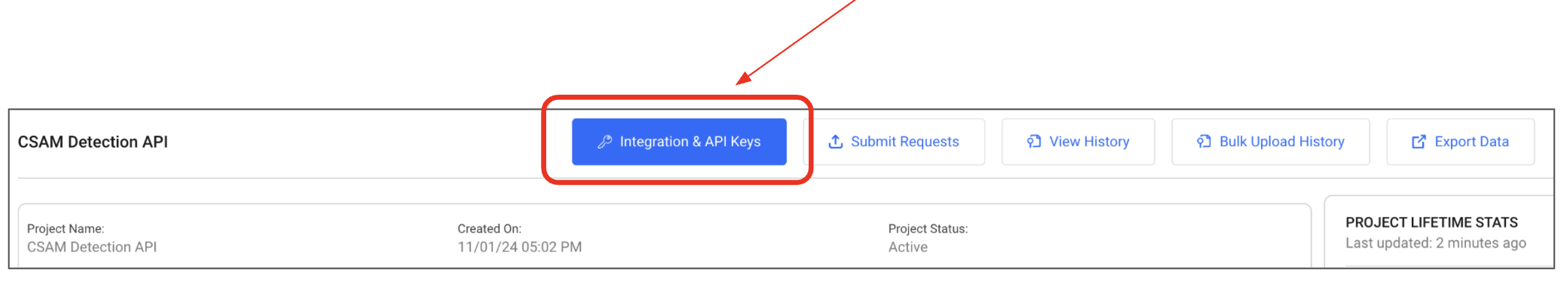

- Tip: In the Hive dashboard, open Projects → find your CSAM Detection Project → click Integration & API Keys to copy the correct key.
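The sketch below shows one way to keep per-Project keys separate in client code, as the bullets above recommend. The environment variable names, the project labels, and the `Token <key>` header scheme are assumptions for illustration; copy the exact header format from the API Reference and the key from your CSAM Detection Project.

```python
import os
from typing import Dict

# Hypothetical environment variable names: one key per Hive Project.
PROJECT_KEY_VARS = {
    "csam_detection": "HIVE_CSAM_API_KEY",
    "visual_moderation": "HIVE_VISUAL_MODERATION_API_KEY",
}


def auth_headers(project: str) -> Dict[str, str]:
    """Build request headers using the API key of the given Project.

    Assumption: the key is sent as `Authorization: Token <key>`.
    """
    var = PROJECT_KEY_VARS[project]
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"{var} is not set")
    return {"Authorization": f"Token {key}"}
```

Looking keys up by Project name makes it harder to send a Visual Moderation key to the CSAM endpoint by accident, even though both share the same API URL.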
