Image and Video Detection

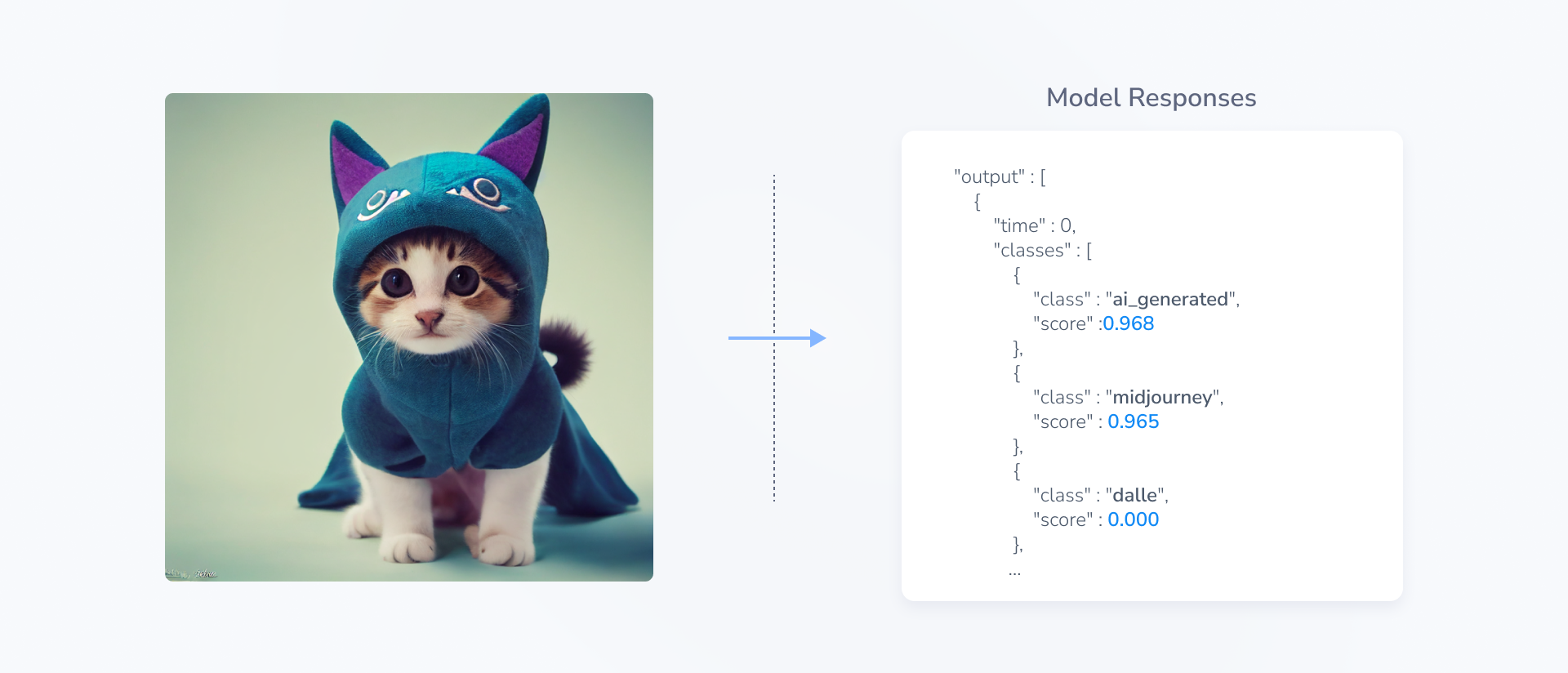

Hive's AI-Generated Image and Video Detection API is a single endpoint that runs two separate models: one that detects images and videos created by a generative AI engine such as Midjourney, DALL-E, or Firefly, and one that detects deepfakes, that is, media in which AI has been used to map one person's face onto another's. Their combined output is returned as a list of classes.

AI-Generated Image and Video and Deepfake Detection

Given an input image or video, Hive's AI-Generated Image and Video Detection model determines whether the input is entirely AI-generated. The model was trained on a large dataset of millions of AI-generated images and human-created images, such as photographs, digital and traditional art, and memes sourced from across the web. The response indicates not only whether the input is AI-generated but also which generative model most likely created it, with a confidence score for each classification to make the results easy to interpret. This helps customers protect themselves from the misuse of AI-generated and synthetic content. For example, it can flag AI-generated content for removal on social media platforms, or help prevent insurance fraud by identifying claim evidence that has been altered with AI.

Given an image or video input, Hive's Deepfake Detection model identifies whether the input is a deepfake. Identifying and removing deepfakes across online platforms is crucial to limit both the significant harm they can cause to the people who appear in them and the misinformation, fraud, and digital sexual assault they enable. The model first detects each face in the input, then classifies each face to determine whether it is a deepfake, assigning each one a confidence score. The response reports the highest of these per-face scores, which is used to determine whether the input as a whole is a deepfake.
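The max-over-faces rule can be sketched in a few lines of Python. This is a minimal illustration, not Hive's implementation: `aggregate_face_scores` is a hypothetical helper, and returning `None` when no faces are detected is an assumption, since the behavior for inputs without faces is not described here.

```python
from typing import Optional, Sequence


def aggregate_face_scores(face_scores: Sequence[float]) -> Optional[float]:
    """Return the highest per-face deepfake score for one input.

    Hypothetical helper. Returning None when no faces were detected is an
    assumption, not documented API behavior.
    """
    if not face_scores:
        return None
    for score in face_scores:
        # Each per-face score is expected to lie in [0, 1].
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
    # The input-level score is the most suspicious face.
    return max(face_scores)


# Three detected faces; one of them looks manipulated.
print(aggregate_face_scores([0.02, 0.97, 0.10]))  # 0.97
```

Taking the maximum means a single manipulated face is enough to mark the whole input as suspicious, even when the other faces in it are genuine.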

Response

The AI-Generated Image and Video Detection model has two heads:

Generation classification: ai_generated, not_ai_generated

Source classification: sora, pika, haiper, kling, luma, hedra, runway, hailuo, mochi, flux, hallo, hunyuan, recraft, leonardo, luminagpt, var, liveportrait, mcnet, pyramidflows, sadtalker, aniportrait, cogvideos, makeittalk, sdxlinpaint, stablediffusioninpaint, bingimagecreator, adobefirefly, lcm, dalle, pixart, glide, stablediffusion, imagen, amused, stablecascade, midjourney, deepfloyd, gan, stablediffusionxl, vqdiffusion, kandinsky, wuerstchen, titan, ideogram, sana, emu3, omnigen, flashvideo, transpixar, cosmos, janus, dmd2, switti, 4o, grok, wan, infinity, veo3, imagen4, krea, moonvalley, higgsfield, gemini, reve, heygen, sora2, seedream, seedance, grokimagine, gemini3, mai, lucid, sanavideo, flux2, qwen, zimage, viduq2, bria, blip3o, pixverse, ovis, longcat, cogview, bagel, ray3, gptimage1_5, hidream, steadydancer, ltx, personalive, dreamid, scail, meta, vibe, imagineart, seedance2, other_image_generators (an image generator other than those listed), inconclusive, inconclusive_video (no video source identified), or none (media is not AI-generated)

The confidence scores for each model head sum to 1.

The first head gives a binary classification for every input, identifying whether it was AI-generated, along with a confidence score. The second head identifies the likely source, with support for the most popular AI image and video generators currently in use. If the model cannot identify a source, the source head returns one of the inconclusive classes listed above, while none indicates that the media is not AI-generated.
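The two heads can be separated client-side and checked against the sum-to-1 property. The sketch below assumes a hypothetical response shape, a flat list of `{"class": ..., "score": ...}` entries; consult the API reference page for the actual structure. `split_heads` is a hypothetical helper, and the example scores are made up for illustration (a real response would list every source class).

```python
import math
from typing import Any, Dict, List, Tuple

GENERATION_CLASSES = {"ai_generated", "not_ai_generated"}
DEEPFAKE_CLASS = "deepfake"  # produced by the separate deepfake model


def split_heads(classes: List[Dict[str, Any]]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Split a flat list of {"class", "score"} entries into the two AI-generation heads.

    Assumes a hypothetical response shape; not the documented API object.
    """
    generation: Dict[str, float] = {}
    source: Dict[str, float] = {}
    for entry in classes:
        name, score = entry["class"], float(entry["score"])
        if name == DEEPFAKE_CLASS:
            continue  # belongs to neither AI-generation head
        if name in GENERATION_CLASSES:
            generation[name] = score
        else:
            source[name] = score
    # Each head's scores should sum to 1, allowing for float rounding.
    for head_name, head in (("generation", generation), ("source", source)):
        total = sum(head.values())
        if head and not math.isclose(total, 1.0, abs_tol=1e-3):
            raise ValueError(f"{head_name} head scores sum to {total}")
    return generation, source


# Illustrative, made-up scores; remaining source classes omitted (score 0).
example = [
    {"class": "ai_generated", "score": 0.98},
    {"class": "not_ai_generated", "score": 0.02},
    {"class": "midjourney", "score": 0.91},
    {"class": "dalle", "score": 0.05},
    {"class": "none", "score": 0.04},
    {"class": "deepfake", "score": 0.01},
]
generation, source = split_heads(example)
top_source = max(source, key=source.get)
print(top_source, source[top_source])  # midjourney 0.91
```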

Additionally, if available, the response includes the image's C2PA metadata, which describes the image's origin (e.g., which generative model created it). This field is non-empty only when the image contains C2PA metadata. Because metadata can be stripped or falsified, we recommend interpreting it together with the model's classifications rather than relying on it alone.

The Deepfake Detection model's response is contained within a single class: deepfake. Each detected face in the input is assigned a confidence score between 0 and 1, inclusive, and the response reports the highest of these scores. A score at or near 0 means the input is most likely not a deepfake; a score at or near 1 means it most likely is.

To see a complete API response object, you can visit the API reference page. If you are interested in the legacy Deepfake Detection-only API response, you can visit its separate API reference page.

Supported File Types

Image Formats:

- gif
- jpg
- png
- webp

Video Formats:

- mp4
- webm
- avi
- mkv
- wmv
- mov
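To reject unsupported files before uploading them, a client-side check against the listed extensions might look like the following minimal sketch. `media_kind` is a hypothetical helper that looks only at the file extension, so variants not listed here (such as `.jpeg`) are rejected; the service itself may identify formats differently.

```python
from pathlib import Path
from typing import Optional

# Extensions taken from the supported file types listed above.
IMAGE_EXTENSIONS = {"gif", "jpg", "png", "webp"}
VIDEO_EXTENSIONS = {"mp4", "webm", "avi", "mkv", "wmv", "mov"}


def media_kind(path: str) -> Optional[str]:
    """Return "image", "video", or None, based only on the file extension."""
    ext = Path(path).suffix.lower().lstrip(".")
    if ext in IMAGE_EXTENSIONS:
        return "image"
    if ext in VIDEO_EXTENSIONS:
        return "video"
    return None  # not in the supported lists


print(media_kind("claim_photo.PNG"))  # image
print(media_kind("clip.mov"))         # video
print(media_kind("scan.tiff"))        # None
```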

Thresholds

We recommend the following thresholds to optimize model performance:

- AI-Generated Image: 0.9
- AI-Generated Video: 0.9 on any frame
- Deepfake Image: 0.9
- Deepfake Video: 0.5 on two consecutive frames, or on at least 5% of all frames

Start with these thresholds, then refine them to suit your specific use case.
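The recommended thresholds can be applied as simple decision rules once you have the relevant scores. This is a minimal sketch under several assumptions: the function names are hypothetical, a score equal to the threshold is treated as meeting it, per-frame scores are assumed to be available in temporal order, and the deepfake video rule is read as "at least 5% of analyzed frames score 0.5 or above". Keeping the thresholds as named constants makes them easy to tune later.

```python
from typing import Sequence

# Recommended starting thresholds; tune these for your use case.
AI_GENERATED_IMAGE_THRESHOLD = 0.9
AI_GENERATED_VIDEO_FRAME_THRESHOLD = 0.9
DEEPFAKE_IMAGE_THRESHOLD = 0.9
DEEPFAKE_VIDEO_FRAME_THRESHOLD = 0.5
DEEPFAKE_VIDEO_MIN_FRACTION = 0.05


def flag_ai_generated_image(score: float) -> bool:
    return score >= AI_GENERATED_IMAGE_THRESHOLD


def flag_ai_generated_video(frame_scores: Sequence[float]) -> bool:
    # Flag the video if any single frame reaches the threshold.
    return any(s >= AI_GENERATED_VIDEO_FRAME_THRESHOLD for s in frame_scores)


def flag_deepfake_image(score: float) -> bool:
    return score >= DEEPFAKE_IMAGE_THRESHOLD


def flag_deepfake_video(frame_scores: Sequence[float]) -> bool:
    # frame_scores is assumed to hold one score per analyzed frame, in order.
    hits = [s >= DEEPFAKE_VIDEO_FRAME_THRESHOLD for s in frame_scores]
    if not hits:
        return False
    two_consecutive = any(a and b for a, b in zip(hits, hits[1:]))
    enough_frames = sum(hits) / len(hits) >= DEEPFAKE_VIDEO_MIN_FRACTION
    return two_consecutive or enough_frames


# 100 analyzed frames with low deepfake scores.
frames = [0.1] * 100
print(flag_deepfake_video(frames))  # False
frames[10] = frames[11] = 0.6       # two consecutive frames at or above 0.5
print(flag_deepfake_video(frames))  # True
```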
