Using Text Moderation API

Welcome to Hive's Text Moderation! This guide will help you configure your project, integrate our API, and understand our model responses.

Thanks for choosing Hive for your moderation needs - we’re thrilled to have you on board! The purpose of this guide is to show you how to set up a Hive text moderation project, how to submit classification tasks to the Hive API, and how you might process our model responses to design moderation logic around our text model predictions.

Note: If you're just evaluating our Text Moderation API, you can start instantly with theV3 Playground (100 req/day limit) and upgrade to a V2 Enterprise project when you need higher volume.

If you've already spoken to our Sales team, this is the guide you'll follow to integrate your project.

First Steps (Enterprise)

To get started with Hive's Text Moderation, you’ll need to have a V2 Enterprise project. Note that V2 projects for each moderation model are separate from each other.

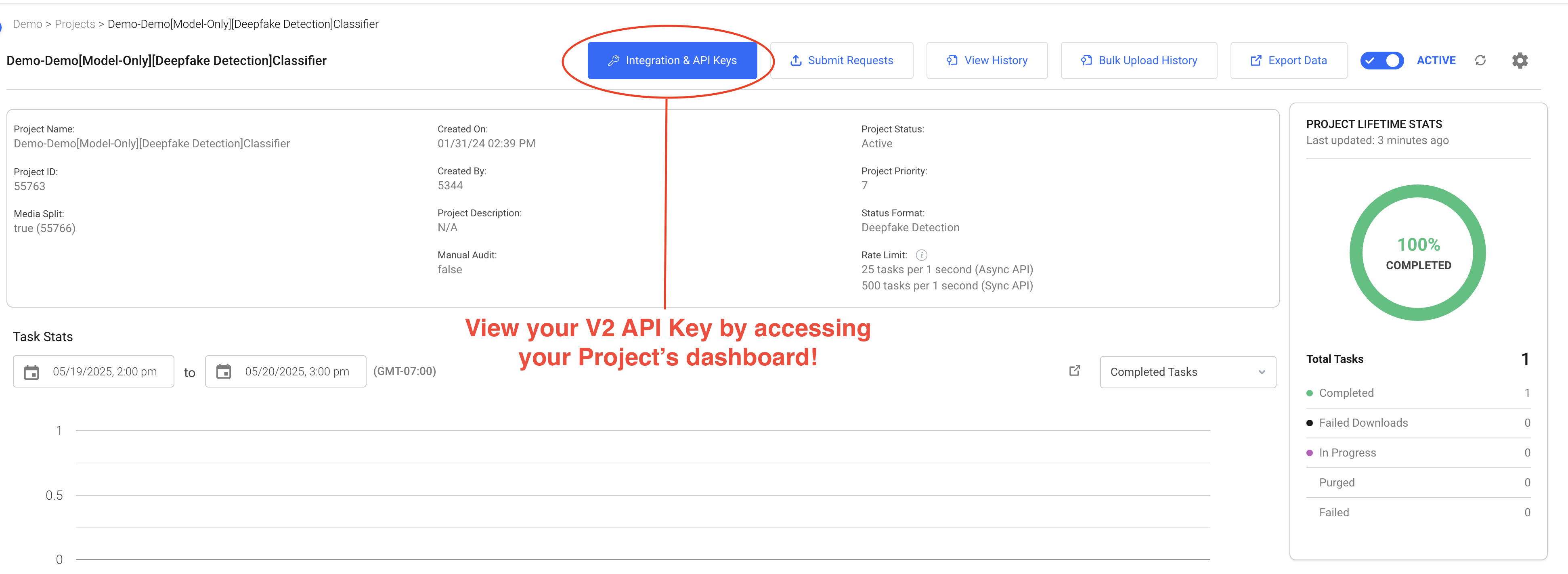

Once you have created a text moderation project with Hive, log into your project dashboard in order to access your API Key. The API Key is a unique authorization token for your project’s endpoint, and you will need to include the API Key as a header in each request made to the API in order to submit tasks for classification.\

Example API Key accessible from the Hive project dashboard\

Tasks are billed to whichever project they are submitted under, so protect your API Key like you would a password! If you have multiple Hive projects, the API Key for each endpoint will be unique.

Submitting Tasks

Input

When submitting a moderation task, you’ll need to provide raw text sourced from your content as an input to the Hive API. Externally hosted content such as links to comments and posts are not readable by the text moderation API.

NOTE:The default maximum length for text submissions is 1024 characters. If you need to analyze longer blocks of text, you can split the input text (e.g., using punctuation or spacing) across multiple related submissions.

Submission

You can submit tasks to Hive API endpoints in two ways: synchronously or asynchronously. This section will focus on synchronous submission, which is preferable for most text moderation users.

Synchronous submission is optimized for real-time moderation needs. If you have a constant stream of tasks and need responses quickly, you’ll want to use synchronous submission - the Hive API will return model outputs directly in the response message.

Once you’ve extracted raw text from your content, you can use the following code template to populate requests to the Hive text moderation API with text strings programmatically and access model responses for analysis.

# Imports:

import requests # Used to call most Hive APIs

# Inputs:

API_Key = 'your_textAPI_key' # The unique API Key for your text project

def get_hive_response(input_text):

headers = {'Authorization': f'Token {API_Key}'}

data = {'text_data': input_text} # Must be a string. This is also where you would insert metadata if desired.

# Submit request to the synchronous API endpoint.

response = requests.post('https://api.thehive.ai/api/v2/task/sync', headers=headers, data=data)

response_dict = response.json()

return response_dict

def handle_hive_classifications(response_dict):

# Parse model response JSON for model classifications and use for moderation.

# See a basic example implementation in the last section.

pass

def handle_hive_patternmatch(response_dict):

# Parse model response JSON for pattern matches/text filters and use for moderation.

# See a basic example implementation in the last section

passExample functions to submit text in a task to Hive APIs and process results. See example implementations for handlehive_classifications_and handle hive_patternmatch_later on.\

For synchronous requests, the Hive API will generally return a response within 100 ms for a typical short message string and within 500 ms for a max length (1024 character) submission.

NOTE:If you are planning to submit large numbers of tasks concurrently, asynchronous submission may be more suitable. In this case, you’ll also need to provide a callback URL (e.g., webhook) to which the API can send a POST request once results have finished processing. Syntax for submitting tasks asynchronously and more info is available on our API reference. If you need help determining the best way to submit your volume, please feel free to contact [email protected].

Response

Hive APIs do not remove your content or ban users itself. Rather, the Hive API will return classification metrics from our models as a JSON object that details the type(s) of sensitive subject matter in the submitted text and a score indicating severity. You can use this to take appropriate actions based on your content policies.

To see what this might look like for your moderation needs, it may be helpful to walk through an example model response.

Model Response Format & Severity Classifications

For moderation purposes, you’ll want to focus on the classifications and severity scores for input text given in the model response object. Our text model can identify speech in five main classes:

- sexual - explicit sexual descriptions, suggestive or flirtatious language, sexual insults

- hate - slurs, negative stereotypes or jokes about protected groups, hateful ideology

- violence - descriptions of violence, threats, incitement

- spam - text such as links or phone numbers intended to redirect to another platform

- bullying - threats or insults against specific individuals, encouraging self-harm, exclusion

We also offer a promotions class upon request, which captures asking for donations, advertising products or services, soliciting for likes, follows, or shares etc.

Our model predictions will include a severity score for each class, a discrete integer ranging from 0 (clean or unrelated to the class) to 3 (most severe). For the spam and promotions classes, the score will be either 0 or 3 rather than a multi-level classification.

Here’s what the response portion of the object returned by the Hive API actually looks like - some fields have been truncated for clarity:

"response": {

.

.

.

"language": "EN",

"moderated_classes": [

"sexual",

"hate",

"violence",

"bullying",

"spam"

],

"output": [

{

"time": 0,

"start_char_index": 0,

"end_char_index": 110,

"classes": [

{

"class": "spam",

"score": 3

},

{

"class": "sexual",

"score": 2

},

{

"class": "hate",

"score": 0

},

{

"class": "violence",

"score": 0

},

{

"class": "bullying",

"score": 0

}

]

}

]

}Our model assigns severity scores based on an interpretation of full phrases and sentences in context. As an example, the model would score text that includes a slur as 3 for hate, but text that references a negative stereotype without overtly offensive language as a 2.

Text moderation supports many commonly used languages, but the supported moderation classes for each language varies. If model classifications are not supported for a language detected in the text, the score for that class will be -1. You can find a full description of our current language support here.\

Profanity and Personal Identifiable Information (PII)

Hive models also include a pattern-matching feature that will search input text for pre-defined words, phrases, or formats and return any exact matches. This can complement model classifications to provide additional insights into your text content. Currently, profanity and personal information (PII) such as email addresses, phone numbers and addresses are flagged by default.

NOTE:You can also add custom rules for other words and phrases you’d like to monitor within the project dashboard, though some (e.g., slurs) will be picked up by classification results. Additionally, the project dashboard allows you to allowlist words or phrases that Hive flags by default. More information on setting up custom pattern-matching rules is available here.

Here’s what pattern-matching results will look like within the model response object (classification section truncated for clarity):

"response": {

"input": "..."

"custom_classes": [],

"text_filters": [

{

"value": "ASS",

"start_index": 107,

"end_index": 110,

"type": "profanity"

}

],

"pii_entities": [

{

"value": "[email protected]",

"start_index": 38,

"end_index": 57,

"type": "Email Address"

},

{

"value": " 617-768-2274.",

"start_index": 80,

"end_index": 94,

"type": "U.S. Phone Number"

}

],

.

.

.Pattern-matching results include indices representing the location of the match within the input string. You can use these indices to modify or filter your text content as desired.

What To Do With Our Results

Once you have decided on a content policy and what enforcement actions you want to take, you can design custom moderation logic that implements your policy based on severity scores pulled from the model response object.

Here’s a simple example of moderation logic that defines restricted classes and then flags text that scores at the highest severity in any of those classes. This might reflect a content policy that allows controversial text content (e.g., score 2) but moderates the most harmful content in each class.

# Inputs

severity_threshold = 3 # Severity score at which text is flagged or moderated, configurable.

restricted_classes = ['sexual','hate','violence','bullying','spam'] # Select classes to monitor

def handle_hive_classifications(response_dict):

# Create a dictionary where the classes are keys and the scores their respective values.

scores_dict = {x['class']:x['score'] for x in response_dict['status'][0]['response']['output'][0]['classes']}

for ea_class in restricted_classes:

if scores_dict[ea_class] >= severity_threshold:

print('We should ban this guy!')

# This is where you would call your real moderation actions to tag or delete posts, ban users etc.

print(str(ea_class) + ': ' + str(scores_dict[ea_class]))

breakWhen designing your enforcement logic, you will need to decide whether to moderate each class at level 1, level 2, or level 3 depending on your community guidelines and risk sensitivity.

IMPORTANT:Because all platforms have different needs, we strongly encourage you to consult our text model description as you consider which classes to moderate and at what severity. Here you’ll find descriptive examples of each severity level for each class. We’re happy to provide more guidance or real examples if you need them.

You can experiment with defining different thresholds for different classes, or build in different moderation actions for different classes depending on severity. For example, a dating platform with messaging capabilities might choose to allow sexual content scoring a 2 but moderate text that scores a 2 in other classes.

Here’s another simple function you can use to parse the response object for pattern-matching results and take action on any matches.

def handle_hive_patternmatch(response_dict):

pm_dict = {x['type']:x['value'] for x in response_dict['status'][0]['response']

if pm_dict = False

pass # Do nothing if text filters, PII entities, and custom classes are all empty

else

print('Filter match') # Insert your moderation logic here

for type_i in pm_dict

print(str(type_i) + ': ' + str(pm_dict[type_i]))This will do nothing if no text filters are triggered but can flag any exact matches, which you can use to take any desired moderation actions. In real moderation contexts, you may wish to combine pattern-matching results with additional insights about the user. For example, profanity can be moderated based on age, while certain PII entities can be moderated based on a number of similar messages posted.\

Final Thoughts

We hope that this guide has been a helpful starting point for thinking about how you might use Hive APIs to build automated enforcement of content policies and community guidelines on your platform. Also stay tuned as we announce additional features of our moderation dashboard, which can provide insight into individual user history to help inform moderation decisions.

If any questions remain, we are happy to help in designing your moderation solutions. Please feel free to contact us at [email protected] or [email protected].

Updated 2 months ago