CSAM Detection - Combined API

This model is available to Enterprise customers only. Contact us to enable access for your organization.

Overview

Hive's Combined CSAM Detection API runs two CSAM (Child Sexual Abuse Material) detection models, created in partnership with Thorn:

- Hash Matching: Capable of detecting known CSAM.

- Classifier: Capable of detecting novel CSAM.

This model can accept both images and videos as input.

Hash Matching

To detect CSAM through hash matching, the model first hashes the input image and then matches the hash against an index of known CSAM. All matches found in the index are returned, each with a matchDistance that indicates the dissimilarity between the source and the target media (a lower value means a closer match).

The minimum image dimensions for hashing are 50 x 50 pixels.

If no match is found, the image is also sent to the classifier.
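The flow above can be sketched on the client side as follows. This is a minimal illustration, not the documented response schema: only the matchDistance field name comes from this page, while the list-of-dicts shape and the function names are assumptions made for the example.

```python
from typing import Any, Dict, List, Optional


def closest_match(matches: List[Dict[str, Any]]) -> Optional[Dict[str, Any]]:
    """Return the match with the smallest matchDistance, or None if there are none.

    matchDistance measures dissimilarity, so the smallest value is the closest match.
    """
    if not matches:
        return None
    return min(matches, key=lambda m: m["matchDistance"])


def deciding_stage(matches: List[Dict[str, Any]]) -> str:
    """Name the stage that decides the outcome: the classifier only runs when no hash matched."""
    return "hash_matching" if matches else "classifier"


# Hypothetical sample data; real responses may carry additional fields.
sample_matches = [{"matchDistance": 12}, {"matchDistance": 3}]
best = closest_match(sample_matches)
stage = deciding_stage(sample_matches)
```

Check the API reference page for the actual response layout before relying on any field other than matchDistance.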

Classifier

The classifier works by first creating embeddings of the media. An embedding is a list of computer-generated scores between 0 and 1. After we create the embeddings, we permanently delete all of the original media. Then, we use the classifier to classify the content as CSAM or not based on the embeddings. This process ensures that we do not store any CSAM.

Within the response, the classifier makes predictions for three possible classes:

- pornography: Pornographic media that does not involve children

- csam: Child sexual abuse material

- other: Non-pornographic and non-CSAM media

For each class, the classifier returns a confidence score between 0 and 1, inclusive. The confidence scores for each class sum to 1.
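As a rough sketch of how these scores might be consumed, the helper below checks the properties stated above (three classes, each score in [0, 1], scores summing to 1) and returns the highest-scoring class. The flat dictionary shape and the tolerance value are assumptions for illustration; the real response may nest the scores differently.

```python
import math
from typing import Dict, Tuple

EXPECTED_CLASSES = {"pornography", "csam", "other"}


def top_class(scores: Dict[str, float], tolerance: float = 1e-3) -> Tuple[str, float]:
    """Validate classifier scores and return (class_name, score) for the highest score."""
    if set(scores) != EXPECTED_CLASSES:
        raise ValueError(f"unexpected classes: {sorted(scores)}")
    if any(not 0.0 <= value <= 1.0 for value in scores.values()):
        raise ValueError("each score must lie between 0 and 1, inclusive")
    # Allow a small tolerance because floating-point scores rarely sum to exactly 1.
    if not math.isclose(sum(scores.values()), 1.0, abs_tol=tolerance):
        raise ValueError("scores should sum to 1")
    name = max(scores, key=lambda cls: scores[cls])
    return name, scores[name]


# Hypothetical scores for a benign image.
label, confidence = top_class({"pornography": 0.10, "csam": 0.05, "other": 0.85})
```

Taking the top class is only one possible policy. Many integrations instead apply their own threshold to the csam score, which this sketch does not attempt to choose.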

The minimum image dimensions for classifier predictions are 100 x 100 pixels. If letterboxing has been applied to the image (i.e., black borders added to preserve its aspect ratio), the borders are removed before the image is processed.

If a classifierPrediction is returned as null, this means the request to the classifier failed. For the classifier, a request fails when the image is smaller than the minimum image dimensions after de-letterboxing.
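A client can pre-check image size before submitting and treat a null classifierPrediction as a failed classifier request. The sketch below assumes the minimums are in pixels and apply to both width and height; the function names are hypothetical. Because de-letterboxing happens on the server, an image that passes this local check can still fail if its borders are stripped below the minimum.

```python
from typing import Dict, Optional

HASH_MIN_SIDE = 50         # minimum dimensions for hashing: 50 x 50
CLASSIFIER_MIN_SIDE = 100  # minimum dimensions for the classifier: 100 x 100


def eligible_stages(width: int, height: int) -> Dict[str, bool]:
    """Report which stages an image of this size is large enough for."""
    return {
        "hash_matching": width >= HASH_MIN_SIDE and height >= HASH_MIN_SIDE,
        "classifier": width >= CLASSIFIER_MIN_SIDE and height >= CLASSIFIER_MIN_SIDE,
    }


def classifier_succeeded(classifier_prediction: Optional[dict]) -> bool:
    """A null (None) classifierPrediction means the classifier request failed."""
    return classifier_prediction is not None


# An 80 x 120 image is large enough to hash but too small to classify.
stages = eligible_stages(80, 120)
```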

Response

The endpoint merges both models' responses into a single, combined response. For an annotated example of an API response object for this model, see its API reference page.

Supported File Types

Image Formats:

- jpg

- png

Video Formats:

- mp4

- mov

Integrating with the CSAM Detection API

Each Hive model (Visual Moderation, CSAM Detection, etc.) lives inside a “Project” that your Hive rep will create for you.

- The CSAM Detection Project comes with its own API key—use it in the Authorization header whenever you call our endpoints.

- If you use other Hive models, keep their keys separate, even though the API URL is the same.

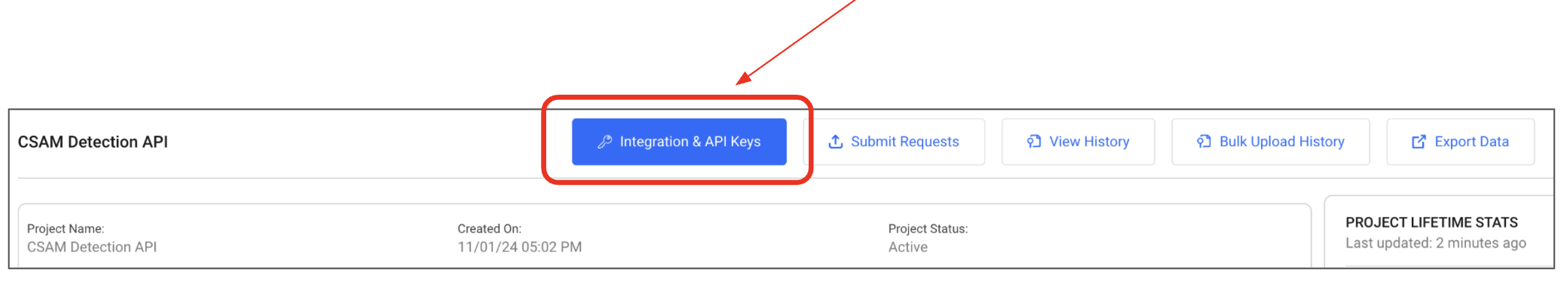

- Tip: In the Hive dashboard, open Projects → find your CSAM Detection Project → click Integration & API Keys to copy the correct key.
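One way to keep per-Project keys separate is to load each from its own environment variable and build the Authorization header from it. This is a sketch under stated assumptions: the environment variable naming and the function are hypothetical, and the header scheme (for example a "Token" or "Bearer" prefix) is not given on this page, so it is passed in explicitly and should be confirmed against the API reference.

```python
import os
from typing import Dict, Mapping, Optional


def build_headers(
    project: str,
    scheme: str,
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Build the Authorization header for one Hive Project.

    Keys are read from HIVE_<PROJECT>_API_KEY (a hypothetical naming convention),
    so each Project's key stays separate even though the API URL is shared.
    """
    source = os.environ if env is None else env
    variable = f"HIVE_{project.upper()}_API_KEY"
    key = source.get(variable)
    if not key:
        raise KeyError(f"missing API key: set {variable}")
    # The scheme is an assumption supplied by the caller, not documented here.
    return {"Authorization": f"{scheme} {key}"}


# Example with an in-memory mapping instead of the real environment.
example_env = {"HIVE_CSAM_DETECTION_API_KEY": "example-key"}
headers = build_headers("csam_detection", "Token", env=example_env)
```

Passing the project name explicitly makes it harder to send a Visual Moderation key to the CSAM Detection Project by mistake.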
